At a glance

Pros

- Excellent performance in DX12/Vulkan games

- Best-in-class raw ray-tracing performance

- World’s first AV1 encoding, at a reasonable price

- Intel’s Limited Edition cooler design is cool, quiet, and attractive

- Inexpensive

Cons

- Lagging performance in DX11 games

- Bad performance without PCIe Resizable BAR active; you need a modern computer

- Buggy software

- XeSS works well, but only in a handful of games

Our Verdict

The Arc A770 Limited Edition is a graphics card that offers great value in some scenarios, but too many frustrating caveats to outright recommend. Despite those quibbles, it’s an encouraging start to Intel’s GPU ambitions.

Price When Reviewed

$329 (8GB) | $349 (16GB, reviewed)

After months—nay, years—of teasing and promises, a new era of graphics card competition is finally here. Today, we’re reviewing Intel’s first proper desktop graphics cards, the $289 Arc A750 and $329 Arc A770 Limited Edition. Sure, the entry-level Arc A380 already trickled onto store shelves, and Arc laptops appeared earlier this summer, but for PC gamers, the Arc A7-series launch is Intel’s first true challenge to the entrenched Nvidia/AMD duopoly.

Intel didn’t fully stick the landing. The company’s software woes are well-documented at this point and contributed to Arc launching a full year later than expected. Some rough edges remain, and Intel’s unique GPU architecture won’t perform to its full potential in every system, or in every game. But in the best-case scenarios, Intel’s Arc A750 and A770 deliver truly compelling value in a mid-range graphics market left largely unsatisfied by AMD and Nvidia during a debilitating years-long GPU shortage—and Arc’s initial ray tracing performance already outshines Nvidia’s second-gen RT implementation.

Should you buy Intel Arc? Chipzilla sent us its in-house “Limited Edition” versions of the Arc A750 and Arc A770 to find out. Let’s dig in.

Intel Arc A770 and A750 specs, features, and price

These debut Arc A7 graphics cards use Intel’s Xe HPG architecture, which we’ve already covered in depth. The flagship Arc A770 includes 32 “Alchemist” Xe cores and comes in 8GB ($329) and 16GB ($349) varieties. We’re testing Intel’s 16GB Arc A770 Limited Edition model, though the company says the 8GB model should deliver essentially the same performance in most games at the 1080p and 1440p resolutions this GPU targets. The step-down $289 Arc A750 wields 28 Xe cores and is available only with 8GB of GDDR6 memory.

Intel

Intel’s Xe HPG architecture isn’t directly comparable to Nvidia’s or AMD’s architectures, so don’t get caught up trying to slice and dice GPU configurations between the rival lineups. It’s also worth pointing out that the listed clock speeds are an average of the frequencies expected during low-load and high-load tasks. Like all modern GPUs, Arc dynamically adjusts its clock speeds depending on what you’re doing.

Gamers do need to be aware of some key Xe HPG (and thus Arc) nuances. To kick things off on a positive note, Arc’s raw ray tracing power outshines even Nvidia’s vaunted RTX 30-series RT cores, though Intel’s companion XeSS upscaling feature is in its infancy and supported in only a handful of titles, like Hitman 3, Death Stranding, and Shadow of the Tomb Raider. Nvidia’s next-gen GeForce RTX 4090 launches the very same day as Arc, however (albeit for a staggeringly higher price), with absolutely massive ray tracing uplifts of its own.

Intel also beat Nvidia and AMD to the punch with AV1 encoding support in Arc’s media engine. It’s a massive feather in Intel’s cap. Intel is famous for its media prowess (Quick Sync, anyone?), and the streaming industry is pushing hard for AV1 to become the new standard. Lower bandwidth costs, higher-quality video, and easier interoperability for whatever the future of streaming media might hold make it a mighty enticing bit of tech indeed.

We’ve already covered Intel Arc’s AV1 performance in-depth, crowning it “the future of GPU streaming.” Nvidia’s RTX 40-series will put up a fight of its own with not one, but two separate AV1 encoders in its media engine, but we don’t expect to see more mainstream-priced next-gen GeForce GPUs until well into 2023, so Arc should be the only AV1 option in town for video creators on a budget for the foreseeable future.

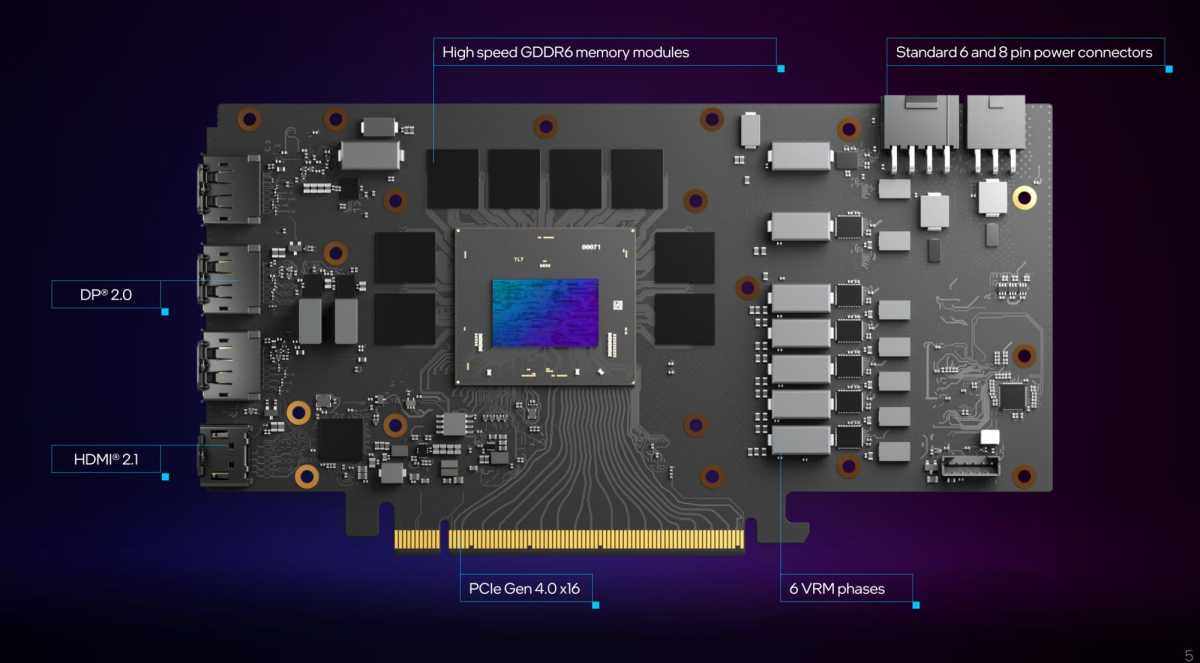

Intel

Every Arc GPU supports up to four display outputs in total, mixing HDMI 2.0b and DisplayPort 2.0. One of those can be an HDMI 2.1 output if the card integrates an additional DisplayPort-to-HDMI protocol converter chip called a PCON. The outputs can drive up to 360Hz at 1080p and 1440p resolution, or power a pair of 4K/120 or 8K/60 panels. The DisplayPorts also support variable refresh-rate monitors, but since Intel outfitted Arc with native HDMI 2.0b rather than 2.1, an adaptive-sync display connected over HDMI won’t work as intended unless the card includes that PCON chip. Intel’s own Arc A770 and A750 Limited Edition cards do come with the extra HDMI 2.1 controller, so your variable refresh rate monitor will work as intended with these specific cards.

Intel rolled out some enticing software features to support Arc’s launch. We covered them in-depth when Arc debuted in laptops, but here’s a summary:

- XeSS is essentially Intel’s rival to Nvidia’s DLSS, upscaling your image using machine learning run on dedicated XMX cores. It’s impressive, but currently available in only a few games.

- Arc Control is Intel’s new graphics control panel, with all the basic features you’d expect: Performance monitoring and tuning, driver and game management, streaming options, an overlay, and more.

- Smooth Sync helps reduce the impact of screen-tearing in high frame rate games (read: e-sports) by applying a lightweight dithering filter where the two frames “tear” on-screen, transforming the typically jarring harsh line into a much less noticeable, slightly blurred area.

Intel

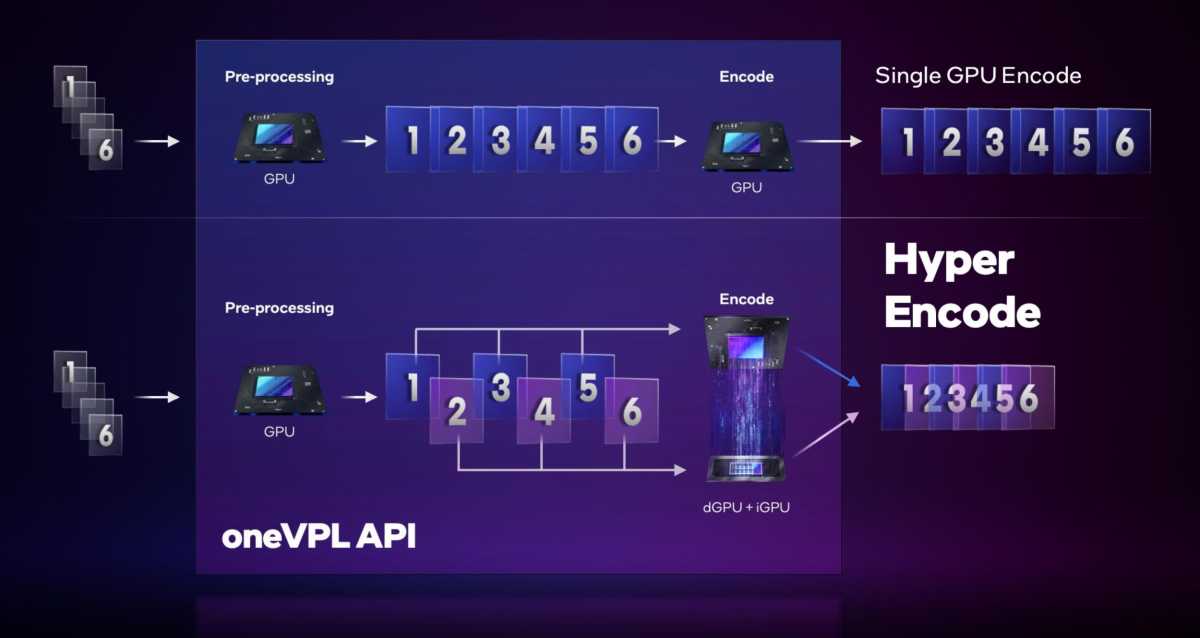

Intel played up “Deep Link” capabilities in Arc laptops, which can leverage the power of the integrated GPUs inside of Intel Core processors to have them work in tandem on compute and creation tasks, but Intel representatives say those technologies hold less importance on desktops, since desktop GPUs are so much more potent. These are gaming GPUs through and through, is Intel’s messaging. That said, Intel Fellow Tom Petersen says Hyper Encode—which puts the discrete and integrated GPUs to work on the same encoding task to supercharge speeds—remains effective on desktops, assuming you’re running an Intel Core-based PC rather than a Ryzen CPU.

This initial review won’t explore those nifty Intel features, alas, as we had limited time to test and wanted to focus our attention on some Arc quirks that are worth digging into.

Intel Arc caveats: Resizable BAR, DX12, and drivers

It’s not all sunshine and rainbows, though. Intel built Arc for the games of tomorrow, and optimizing for that future makes Arc’s performance suffer on systems and games that aren’t riding the bleeding edge.

Brad Chacos/IDG

Those Xe HPG cores excel at ray tracing and in modern games built on the newer DirectX 12 and Vulkan graphics APIs. Many (if not most) triple-A games from studios like EA, Ubisoft, and Microsoft now use those newer technologies, and most of the hit e-sports games have introduced alternative DX12 or Vulkan modes, but many indie games and “double-A” titles are still crafted with DirectX 11. Most of the older games in your Steam backlog probably run DX11 or even DX9, too. Arc runs significantly slower on those, but still at an acceptable clip, and Intel (mostly) priced these graphics cards to reflect those worst-case performance scenarios. As a result, the Arc A770 and A750 can smash the RTX 3060 in modern games, as our benchmarks will reveal. Intel also pledges to keep improving gaming performance across the board on Arc graphics cards.

You’ll also need a modern desktop computer capable of running PCIe Resizable BAR. “ReBAR,” as it’s called by enthusiasts, lets your CPU access your GPU’s entire memory framebuffer, rather than accessing it in tiny 256MB chunks. It can provide a slim-to-moderate performance uplift on GeForce and Radeon cards, but it’s a virtual necessity for Arc due to the way Intel constructed its memory controller. Running Arc on a system without ReBAR results in substantially lower average frame rates and noticeably more stuttering, which Intel is blunt about: “If you don’t have PCIe ReBAR, go get a 3060,” Intel Fellow Tom Petersen said flat-out in a briefing with reporters, and Intel’s Arc Control software will pop up a warning if it’s installed on a system without ReBAR active, encouraging you to turn it on.
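
For a sense of scale on the 16GB Arc A770 reviewed here, a rough back-of-the-envelope calculation shows how many 256MB windows the full framebuffer spans without ReBAR. It assumes binary units (1GB = 1,024MB) and is only an illustration of the size mismatch, not a description of how Intel’s memory controller actually handles each access:

$$
\frac{16\,\text{GB}}{256\,\text{MB}} = \frac{16 \times 1024\,\text{MB}}{256\,\text{MB}} = 64
$$

With ReBAR active, the CPU can address all of that memory at once rather than working through it one window at a time.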

We tested the Arc A770 with ReBAR both on and off across our entire games suite so you can see the difference for yourself, but spoiler: Tom’s right. Unfortunately, that means Arc isn’t a great option for turning an older PC into a gaming rig despite its affordable price. AMD kicked off the PCIe Resizable BAR era with the introduction of “Smart Access Memory,” its special-sauce implementation, in its Ryzen 5000-series processors and 500-series motherboards. Intel followed suit with its 12th-gen Core processors. Both companies then extended the technology backwards, to Ryzen 3000 and as far as 10th-gen Core, but activating it requires updating the firmware of your motherboard and CPU alike. It’s not a hard procedure, but it may be beyond the technical aptitude of some would-be Arc buyers.

Brad Chacos/IDG

Finally, the elephant in the room: drivers.

Intel’s Arc was supposed to come out a long time ago but suffered from delays after the company discovered that scaling up its work in integrated GPUs to much more performant discrete GPUs didn’t work as planned. Earlier this year, the low-end Arc A380 launched in China with atrocious software glitches. Ominous stuff.

Arc drivers are in much better shape now. They provide a satisfying experience much more often than not. That said, I did encounter several minor hiccups during my testing:

- The first time I installed Arc Control, it completely locked up my computer, forcing a hard reboot. The issue didn’t recur, though Arc Control occasionally felt laggy in use.

- Every time I turned on my computer, Windows spawned a prompt asking me if I’d like to allow Arc Control to run. Yes, I still do. This minor annoyance isn’t an issue with GeForce or Radeon drivers, but Intel says it’s already working to fix it.

- I suffered one crash (a fatal exception error) in Gears Tactics, across roughly 20 different benchmark runs.

- I suffered one hard crash that required a hard reboot in Shadow of the Tomb Raider, across roughly 40 benchmark runs.

- I suffered graphical corruption and an unresponsive keyboard/mouse in the main menu of Borderlands 3, across roughly 20 different benchmark runs.

All of these were irritating, but none of them were dealbreakers. It’s also worth listing the issues that Intel fixed during my brief testing time with these Arc GPUs:

- Fortnite failure to launch

- Valorant failure to launch

- Battlefield 2042 app crash in DX12 Game Mode

- Spider-Man Remastered crash when loading with ray traced reflections enabled

- Hitman 3 DX12 corruption in Training Mode

- Saints Row corruption

- YouCam9 crash after changing the scene

Collectively, this information drives home some key points: Intel still has work to do to catch up to the smooth software experience provided by Nvidia and AMD, both of which have been improving their drivers for decades at this point. But Arc’s drivers are in much better shape than they were when the Arc A380 launched months ago, and Petersen says Intel has “thousands” of software engineers working on improving the Arc driver experience.

Expect a somewhat bumpier ride during these early days—but engineers are working to fix those lingering woes, and Intel priced the Arc A750 and Arc A770 to reflect these concerns. Whether you’re willing to accept those bumps and bruises in an affordable first-gen graphics card is up to you.

Intel Arc A770 and A750 Limited Edition design

Brad Chacos/IDG

We’re reviewing Intel’s own “Limited Edition” versions of the Arc A770 and A750, which—despite their name—aren’t limited whatsoever. Think of it along the lines of Nvidia’s “Founders Edition” branding.

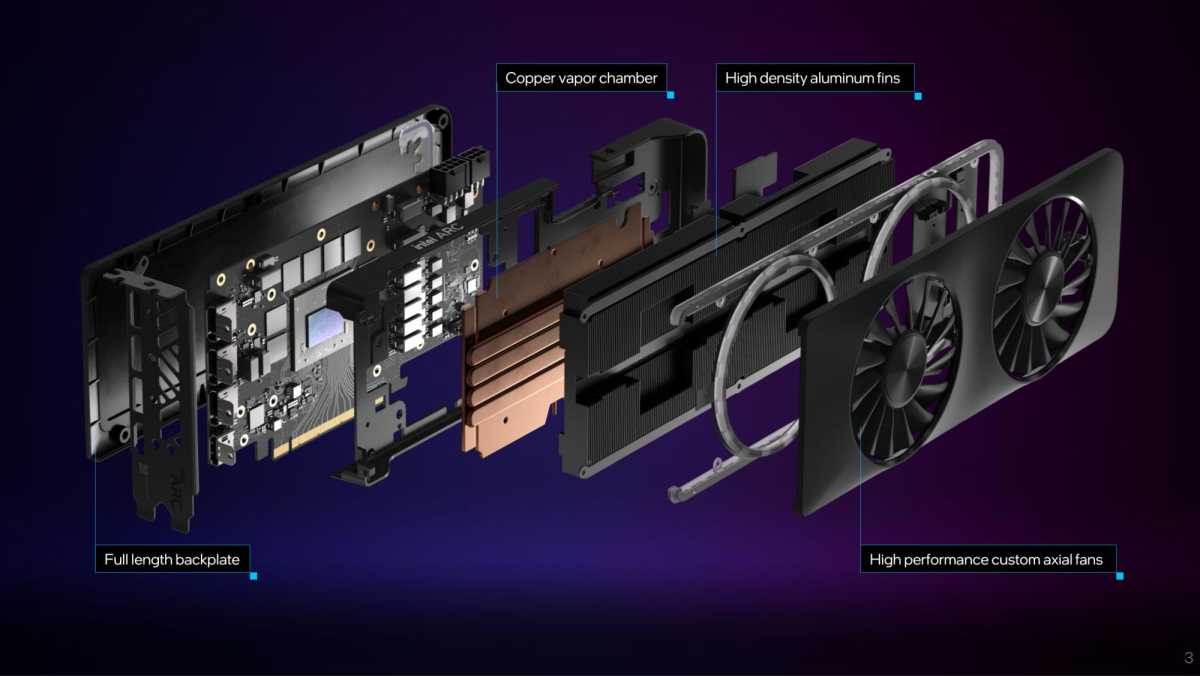

They’re impressively built, offering a clean, black aesthetic and more heft than rival Radeon RX 6600 and RTX 3060 offerings. While many high-end GPUs are resorting to gargantuan three- or four-slot coolers with exotic cutouts and fans galore, the Arc Limited Edition cards stick to a manageable two slots and sport a shroud and backplate combo that fully wraps around the card, punctuated by a pair of fans bristling with blades. Both cards look virtually identical when powered off, right down to their matching 8+6-pin power connectors, but the flagship Arc A770 Limited Edition adds a fetching border of 90 controllable RGB LEDs (blue by default) that look pretty damned good in practice thanks to a mild diffusion effect. As a whole, these Limited Edition cards feel incredibly premium in your hand.

Rather than spew endless words about the design, here are some images from Intel’s review materials that show its construction. In practice, these cards run quiet and cool, though we did discern some very faint coil whine in our A770 unit. That’s not atypical, and it wasn’t especially noticeable outside of game menus, which tend to invoke coil whine due to their excessively high frame rates.

Intel

Intel

Intel

Intel’s Arc A770 Limited Edition comes equipped with 16GB of memory, doubling the standard 8GB. It costs $349, a very reasonable $20 upcharge over the base model. These in-house Intel designs are good, a refreshing development since bigger custom graphics card makers have yet to reveal Arc offerings, though we’ve seen custom Arc cards from the likes of Acer and Gunnir. Intel says the Limited Edition models will be available on October 12 from traditional retail outlets, though the company intends to sell them directly in the future, similar to how AMD sells reference Radeon RX 6000-series GPUs.

Phew! Enough words. Let’s get to the benchmarks.

Our test system

We use an AMD Ryzen 5000-series test rig to be able to benchmark the effect of PCIe 4.0 support on modern GPUs, as well as the performance-boosting PCIe Resizable BAR features. Most of the hardware was provided by the manufacturers, but we purchased the storage ourselves.

- AMD Ryzen 9 5900X, stock settings

- AMD Wraith Max cooler

- MSI Godlike X570 motherboard

- 32GB G.Skill Trident Z Neo DDR4 3800 memory

- EVGA 1200W SuperNova P2 power supply

- 1TB SK Hynix Gold S31 SSD

We’re comparing the $289 Intel Arc A750 LE and $349 Intel Arc A770 LE against their most direct rivals. The ostensibly $330 Nvidia GeForce RTX 3060 is going for closer to $380 to $400 on the streets now that the cryptocurrency bubble has finally burst and you can buy graphics cards again. AMD’s ostensibly $330 Radeon RX 6600, on the other hand, can often be found for $250 to $280. It’s a solid value and the best 1080p graphics card you can currently buy, though Arc aims to upset that.

We test a variety of games spanning various engines, genres, vendor sponsorships (Nvidia, AMD, and Intel), and graphics APIs (DirectX 11, DX12, and Vulkan). Each game is tested using its in-game benchmark at the highest possible graphics presets unless otherwise noted, with VSync, frame rate caps, real-time ray tracing or DLSS effects, and FreeSync/G-Sync disabled, along with any other vendor-specific technologies like FidelityFX tools or Nvidia Reflex. We’ve also enabled temporal anti-aliasing (TAA) to push these cards to their limits. We run each benchmark at least three times and list the average result for each test.
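
The averaging rule above is simple enough to sketch. The helper below is hypothetical (it isn’t our actual benchmarking tooling), and the three frame rates are made-up placeholders rather than results from this review:

```python
from statistics import mean


def average_fps(runs: list[float]) -> float:
    """Average the frame rates from repeated passes of one benchmark.

    Hypothetical helper mirroring the "run each benchmark at least
    three times and report the average" rule from our methodology.
    """
    if len(runs) < 3:
        raise ValueError("each benchmark should be run at least three times")
    # Round to one decimal place for display in a results chart.
    return round(mean(runs), 1)


# Placeholder frame rates from three passes of a single in-game benchmark
print(average_fps([71.8, 72.4, 72.1]))  # 72.1
```

Refusing to average fewer than three runs is a deliberate choice in this sketch: a single pass can be skewed by shader compilation or background activity, which repeated runs help smooth out.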

This suite leans more heavily on DX12 and Vulkan titles, as many newer games use those graphics APIs. That means it won’t reveal the depths of Intel Arc’s lagging DirectX 11 performance, but we’ve included a separate section afterward that compares Arc’s frame rate in games that support both DX11 and DX12. We also tested the Arc A770 fully with PCIe Resizable BAR both on and off, since it’s a crucial part of Intel’s performance story. You’ll find separate listings for those configurations in the charts below.

Gaming performance benchmarks

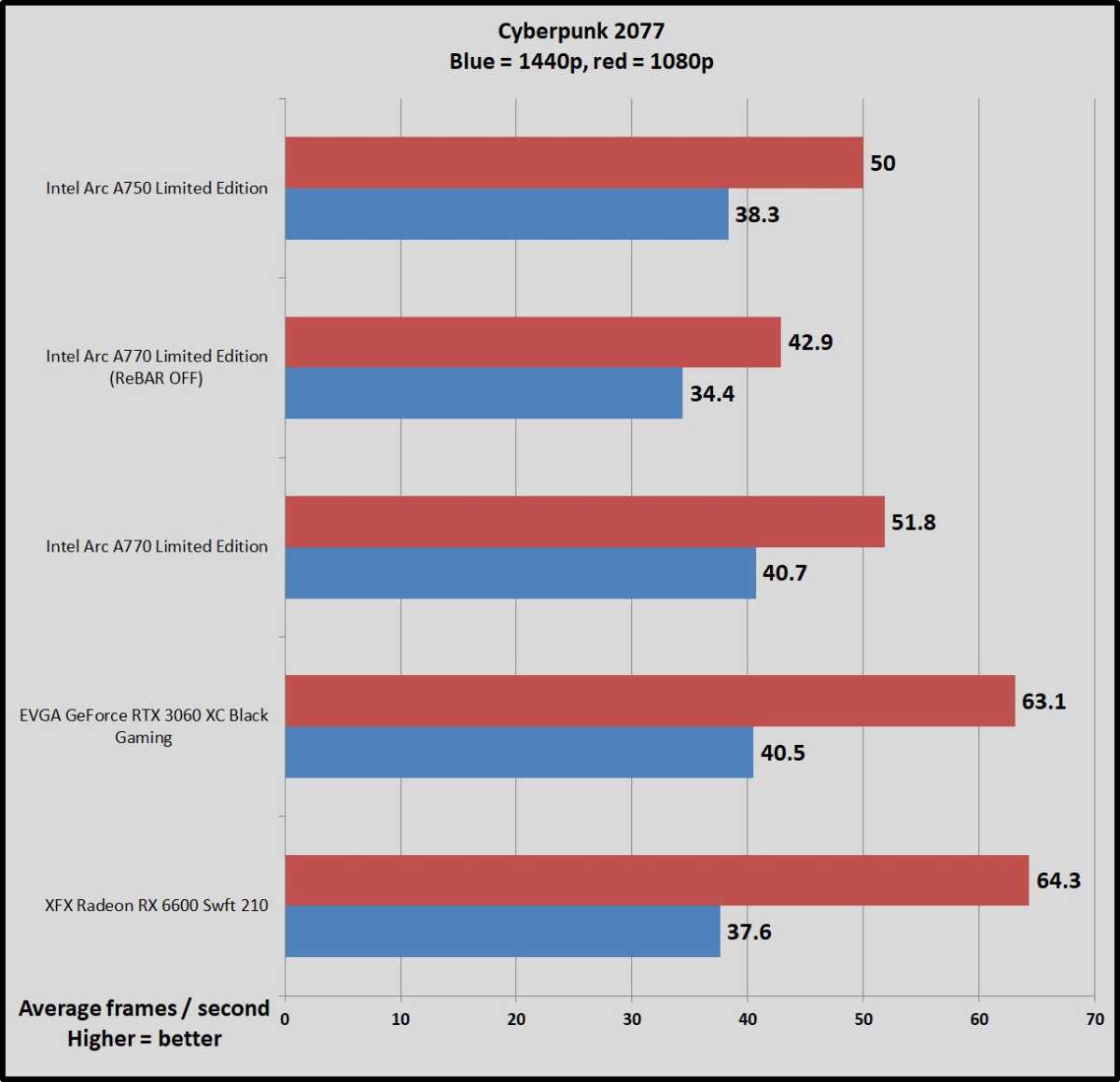

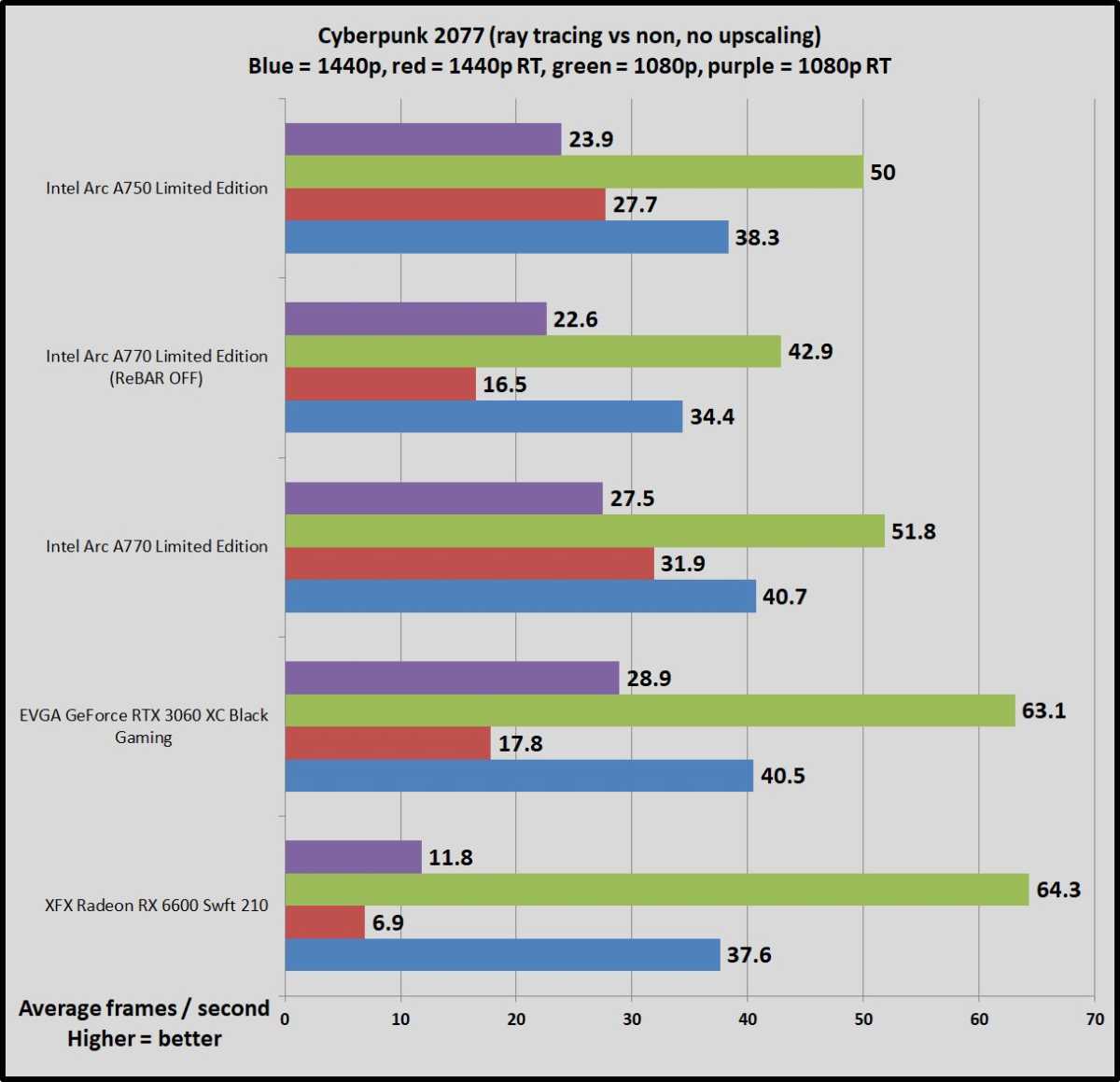

Cyberpunk 2077

Cyberpunk 2077 got off to a rocky start, but it’s enjoying a renaissance after years of fixes (and a dope new Netflix anime). It also remains a graphical DX12 powerhouse capable of melting even the most potent GPUs in the right scenario, especially with cutting-edge ray tracing effects enabled.

Brad Chacos/IDG

AMD and Nvidia have clearly had more time to polish up their drivers for peak Cyberpunk performance, though Arc turns in a respectable showing. Neither Arc card sniffs at the hallowed 60fps mark using the Ultra settings we deploy during testing, but anecdotally, dropping the graphics options down to high provided much smoother gameplay—at least with ReBAR on. Arc GPUs suffer from increased stuttering with ReBAR off, which is partially reflected in the ReBAR-off A770’s tanking average frame rate in these charts.

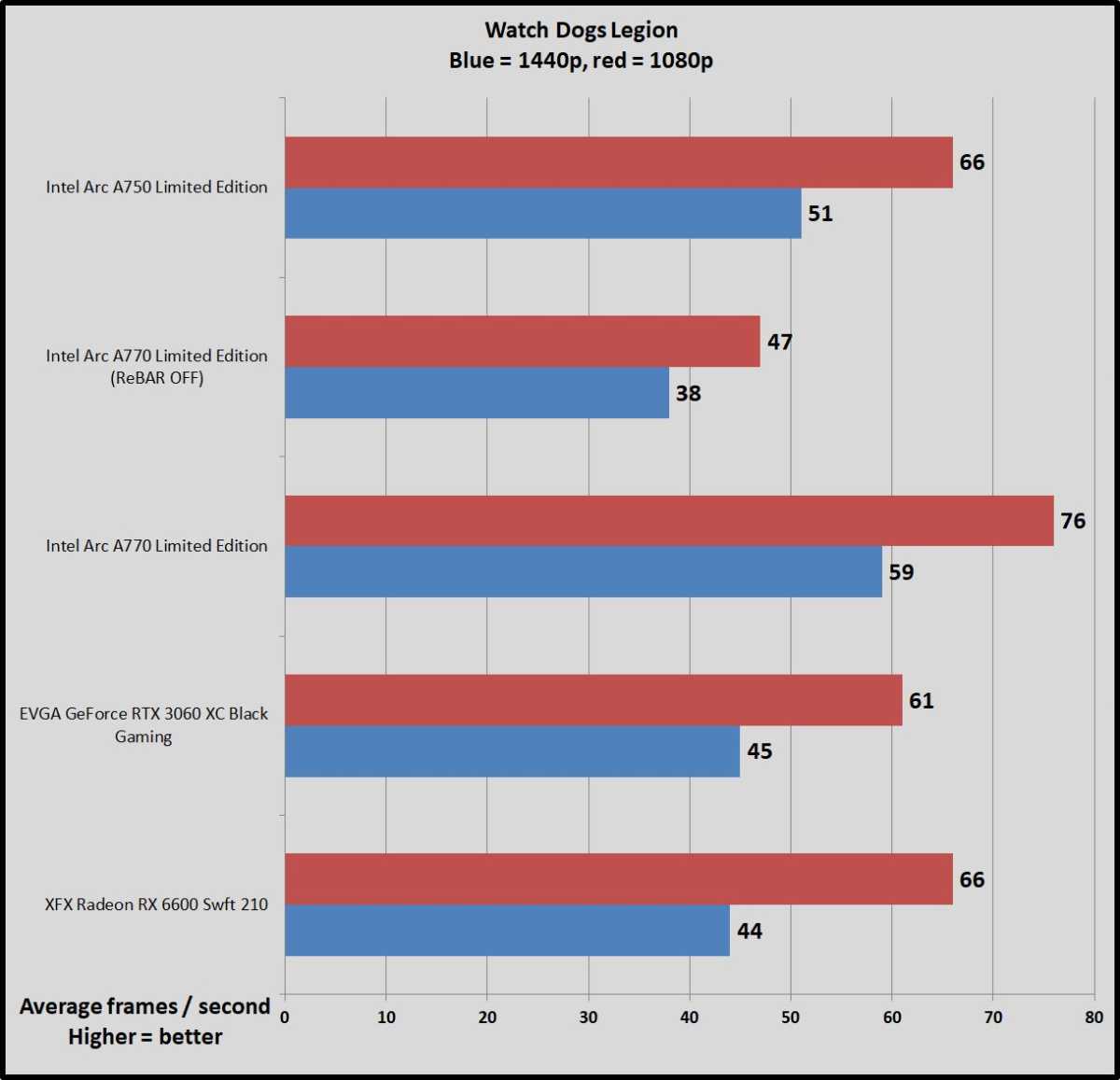

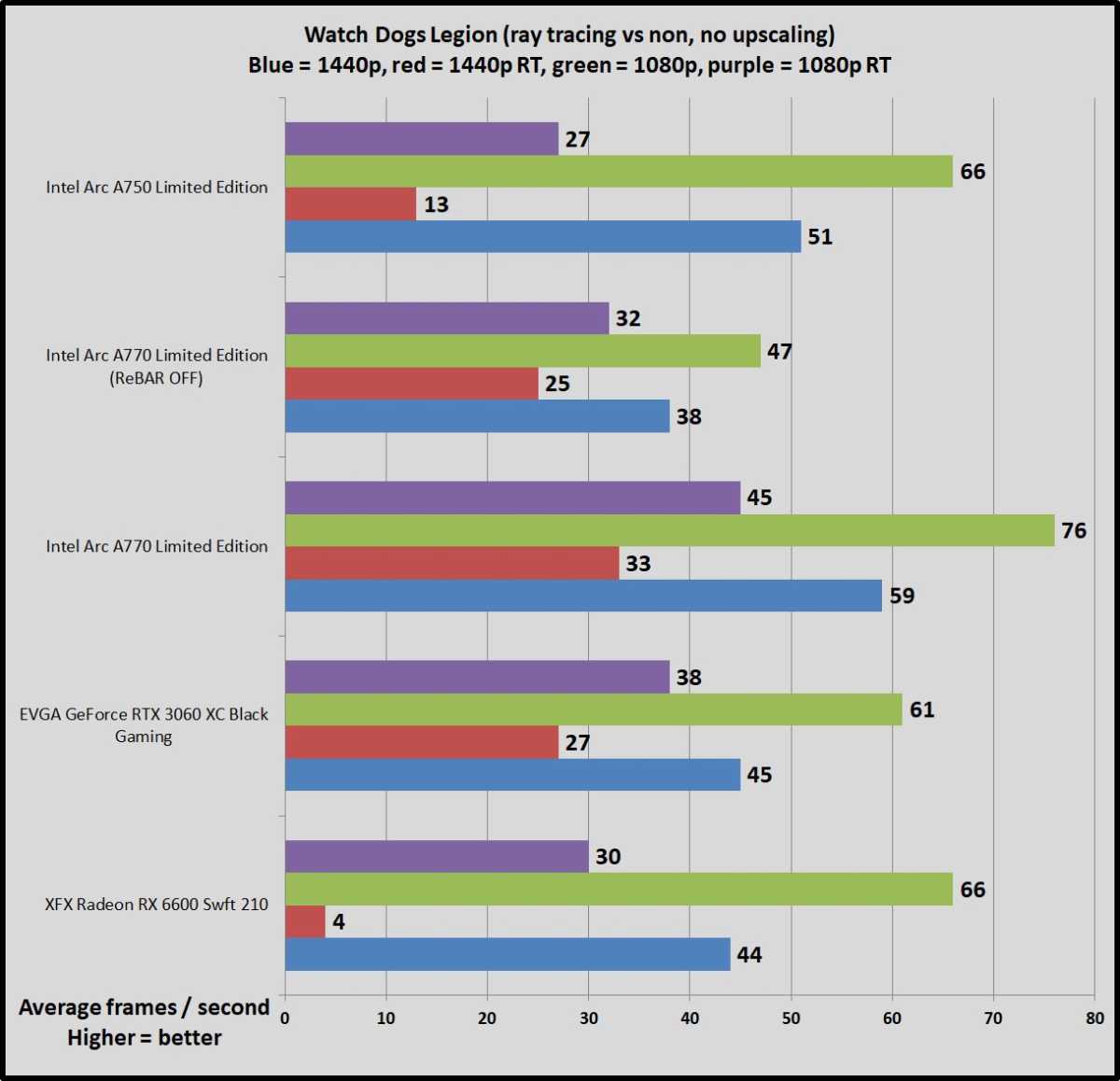

Watch Dogs: Legion

Watch Dogs: Legion is one of the first games to debut on next-gen consoles. Ubisoft upgraded its Disrupt engine to include cutting-edge features like real-time ray tracing and Nvidia’s DLSS. We disable those effects for this testing, but Legion remains a strenuous game even on high-end hardware with its optional high-resolution texture pack installed. It can blow past the 8GB memory capacity offered in most mid-range cards even at 1080p with enough graphical bells and whistles enabled, making the Arc A770 Limited Edition and RTX 3060 particularly well-suited to this game thanks to their higher VRAM.

Brad Chacos/IDG

The Radeon RX 6600, bolstered by AMD’s awesomely large “Infinity Cache” for increased performance at lower resolutions, surpasses the RTX 3060 here. The $289 Arc A750 manages to tie it at 1080p and overtake it at 1440p (where the Infinity Cache is much less effective), but the Arc A770 Limited Edition is the true star here, leaving all other GPUs in the dust.

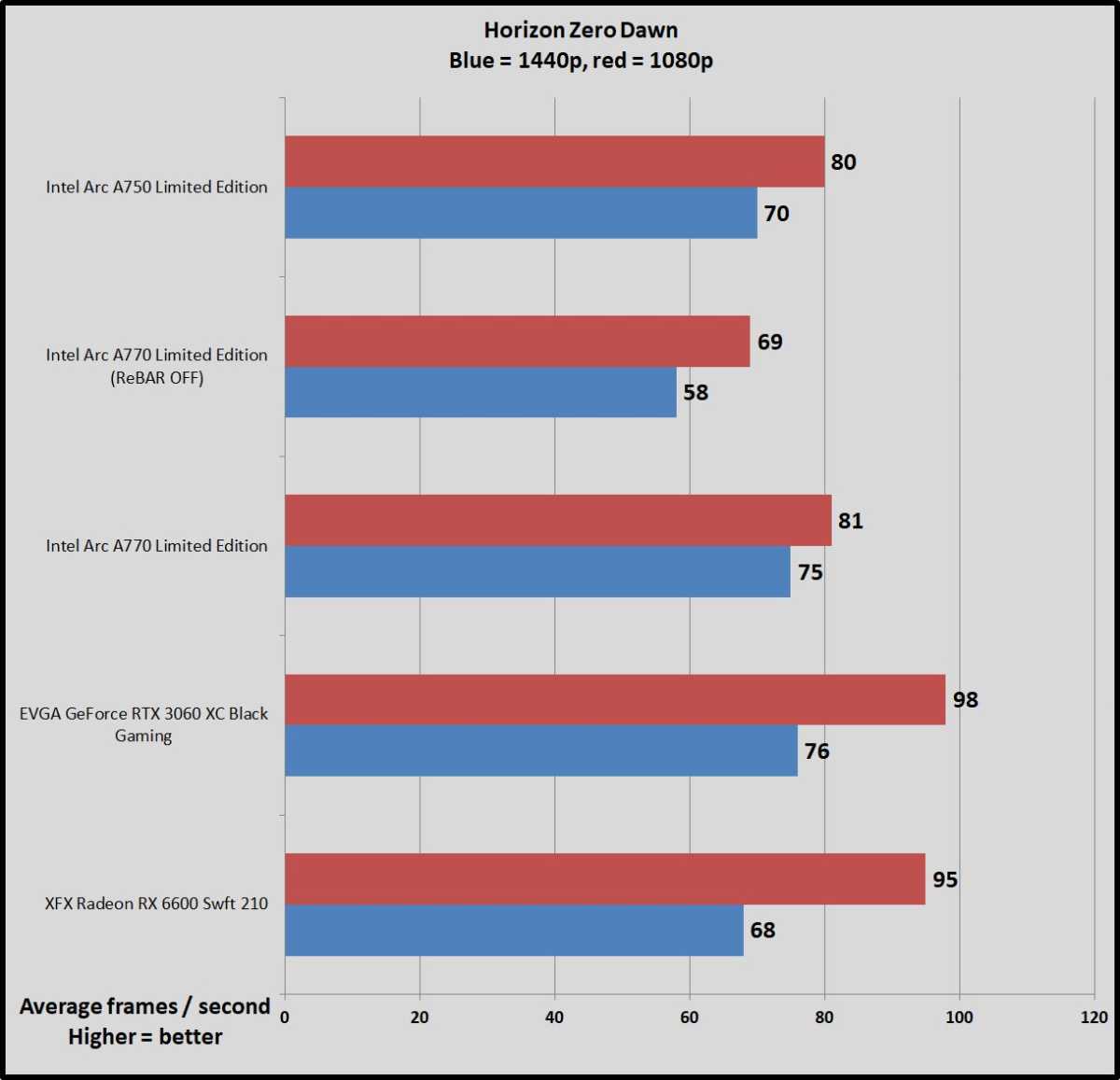

Horizon Zero Dawn

Yep, PlayStation exclusives are coming to the PC now. Horizon Zero Dawn runs on Guerrilla Games’ Decima engine, the same engine that powers Death Stranding.

Brad Chacos/IDG

HZD runs on DirectX 12, but still represents basically the low-water mark for Arc in these benchmarks. It’s well behind Nvidia and AMD here (with Infinity Cache once again doing work), but still delivers a fine gameplay experience well in excess of 60fps at 1080p, and around 60fps at 1440p.

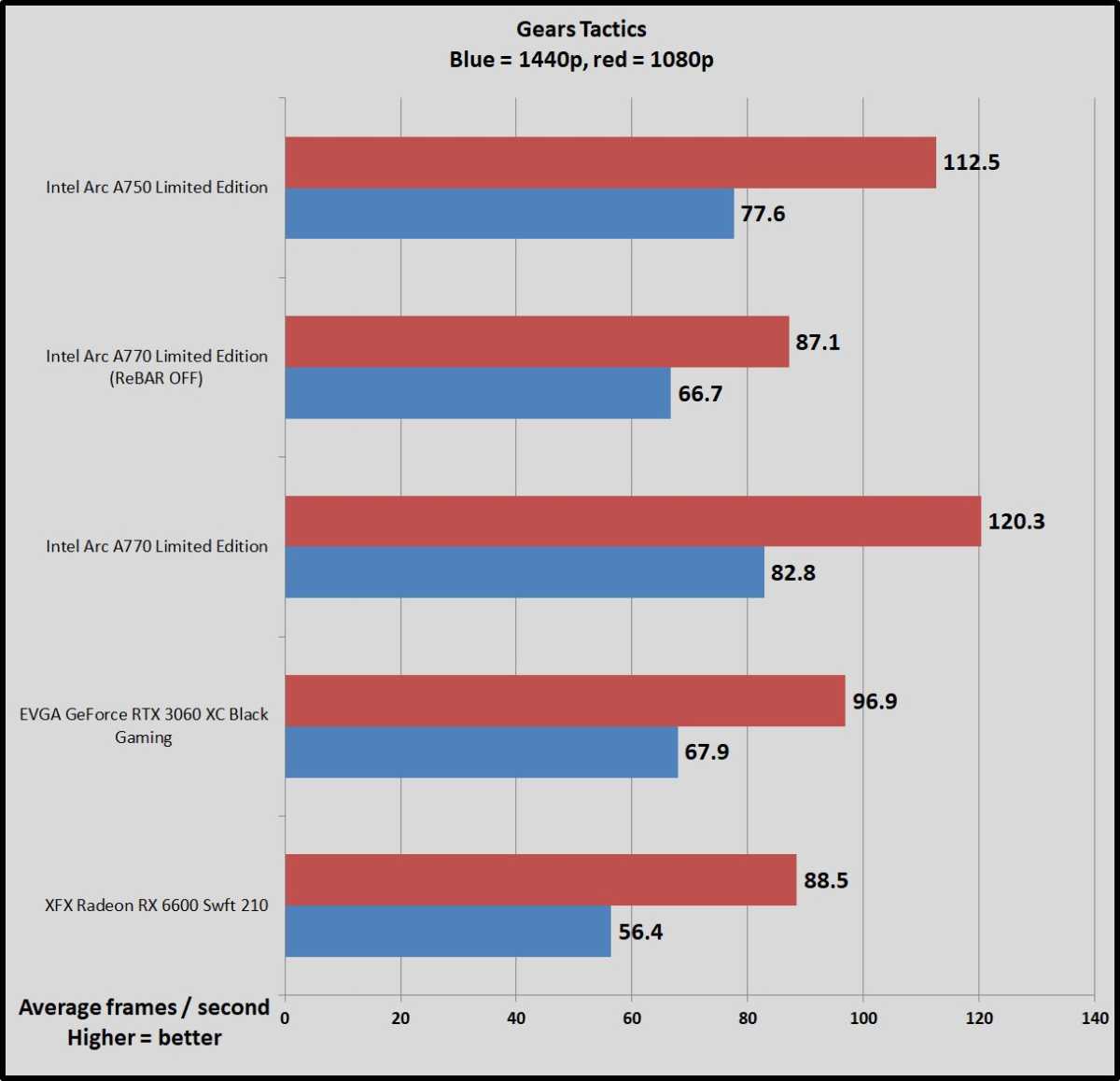

Gears Tactics

Gears Tactics puts its own brutal, fast-paced spin on the XCOM-like genre. This Unreal Engine 4-powered game was built from the ground up for DirectX 12, and we love being able to work a tactics-style game into our benchmarking suite. Better yet, the game comes with a plethora of graphics options for PC snobs. More games should devote such loving care to explaining what flipping all these visual knobs means.

You can’t use the presets to benchmark Gears Tactics, as it intelligently scales to work best on your installed hardware, meaning that “Ultra” on one graphics card can load different settings than “Ultra” on a weaker card. We manually set all options to their highest possible settings.

Brad Chacos/IDG

…and here’s one of Arc’s better showings. Both the A750 and the A770 absolutely smash the RTX 3060 and Radeon RX 6600 here, by a very healthy margin.

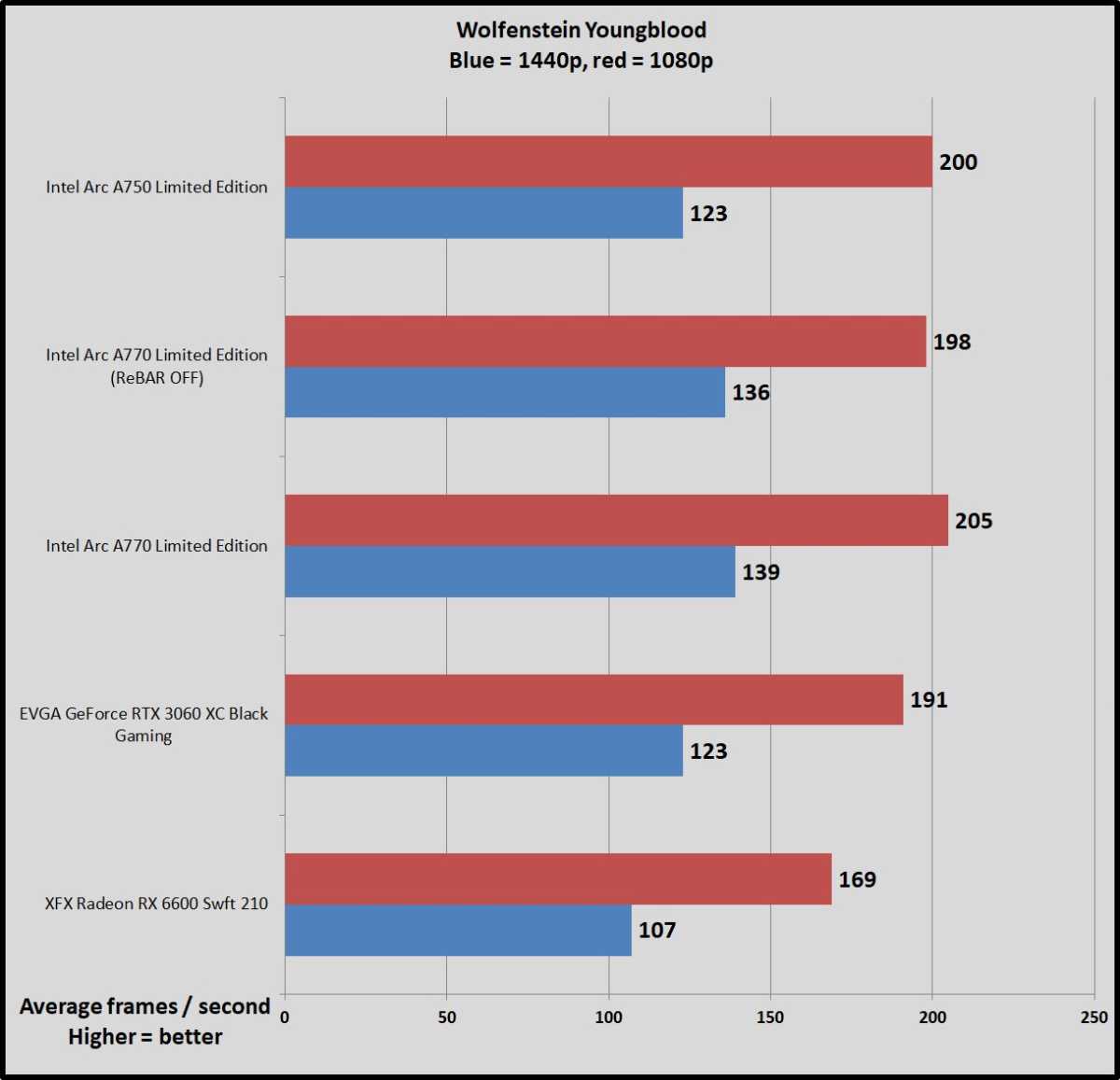

Wolfenstein Youngblood

Wolfenstein: Youngblood is more fun when you can play cooperatively with a buddy, but it’s a fearless experiment—and an absolute technical showcase. Running on the Vulkan API, Youngblood achieves blistering frame rates, and it supports all sorts of cutting-edge technologies like ray tracing, DLSS 2.0, HDR, GPU culling, asynchronous computing, and Nvidia’s Content Adaptive Shading. The game includes a built-in benchmark with two different scenes; we tested Riverside.

Brad Chacos/IDG

Despite all that love for Nvidia, both the Arc A750 and A770 manage to outperform the RTX 3060: barely, in the case of the A750, and by roughly 10 percent in the case of the A770. It’s another strong Intel showing.

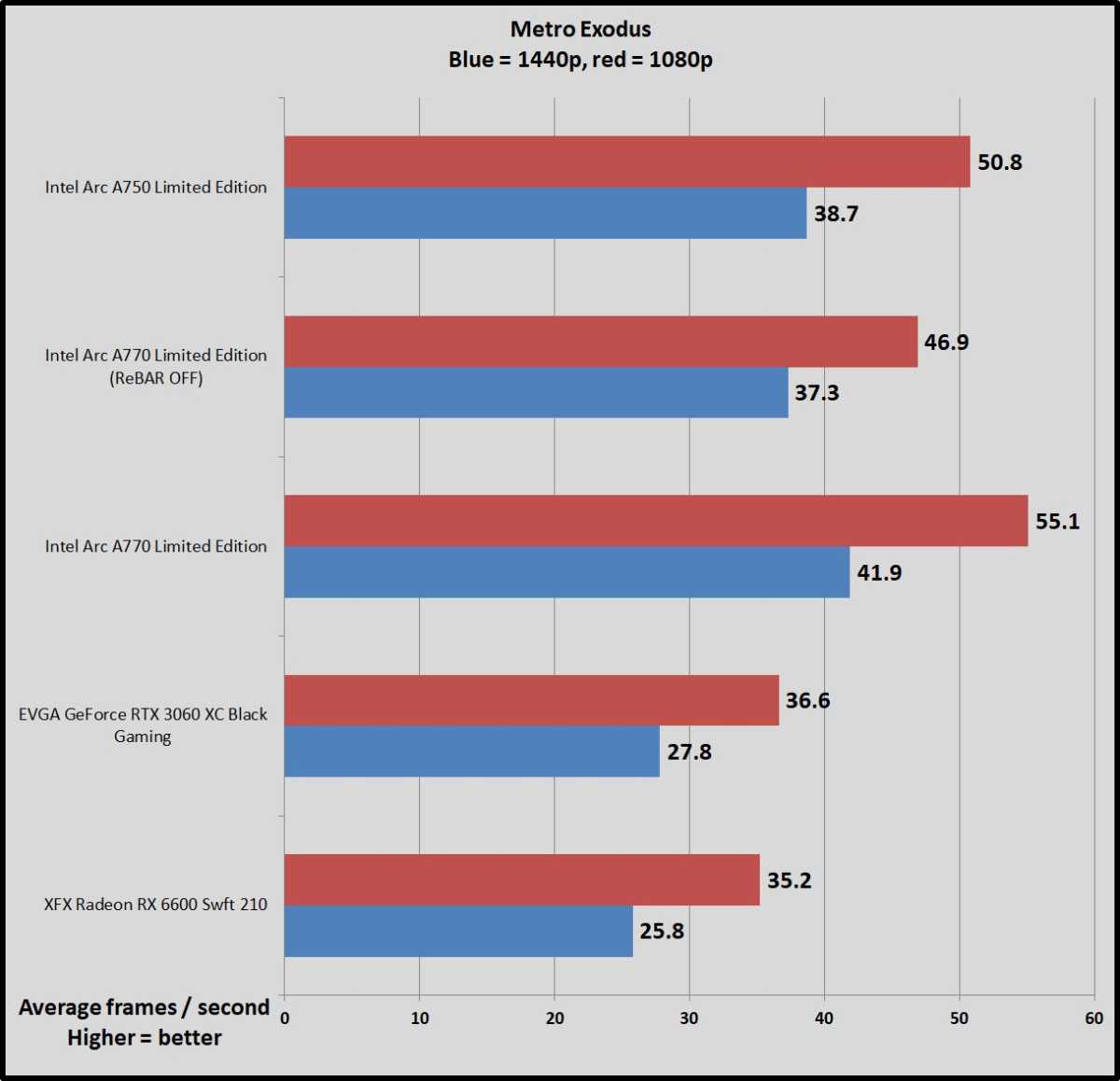

Metro Exodus

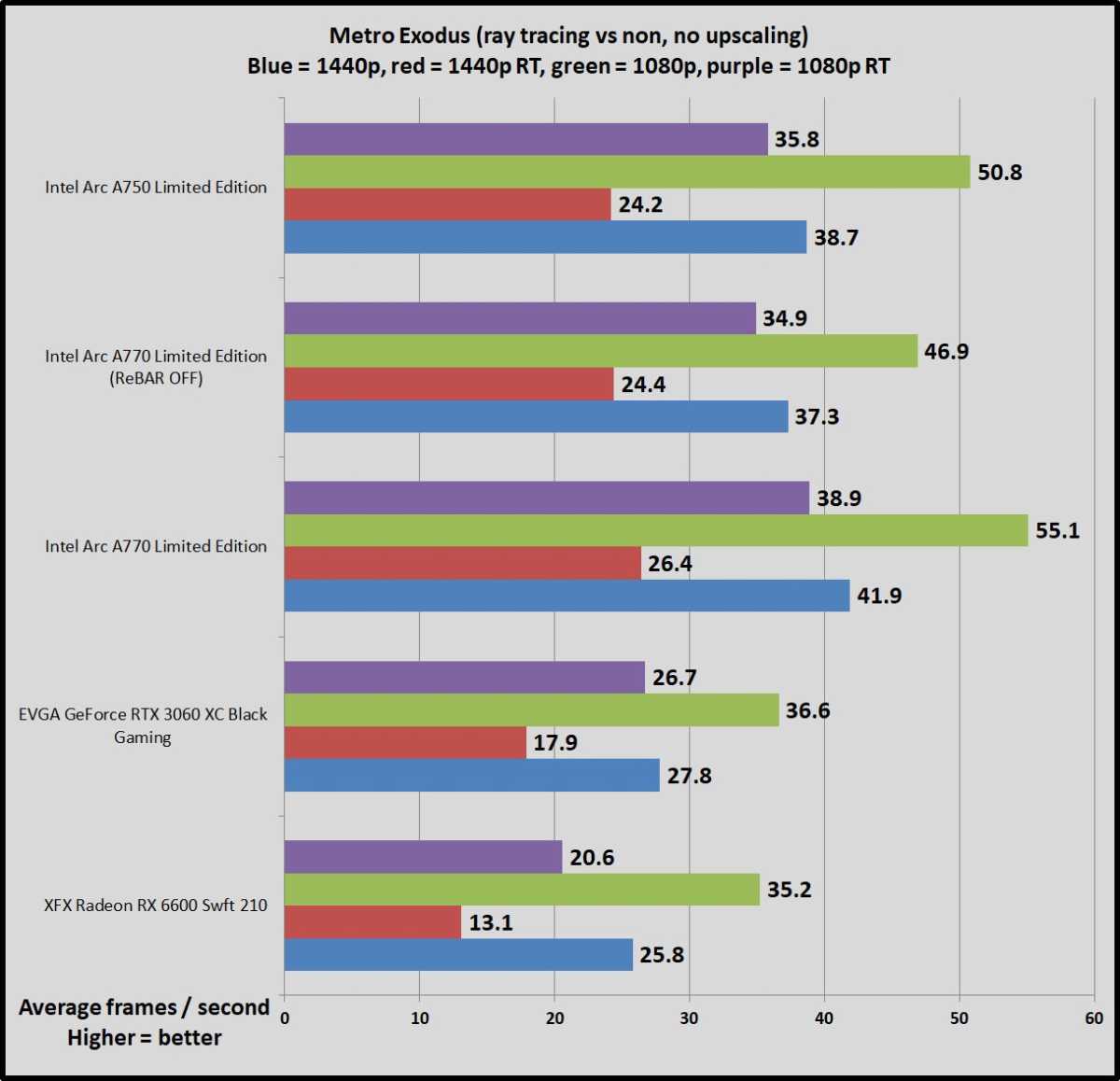

Metro Exodus remains one of the best-looking games around. The latest version of the 4A Engine provides incredibly luscious, ultra-detailed visuals, with one of the most stunning real-time ray tracing implementations released yet. The Extreme graphics preset we benchmark can melt even the most powerful modern hardware, as you’ll see below, though the game’s Ultra and High presets still look good at much higher frame rates. We test in DirectX 12 mode with ray tracing, Hairworks, and DLSS disabled.

Brad Chacos/IDG

It’s another resounding victory for Intel Arc. In modern games where Arc wins, Arc really wins.

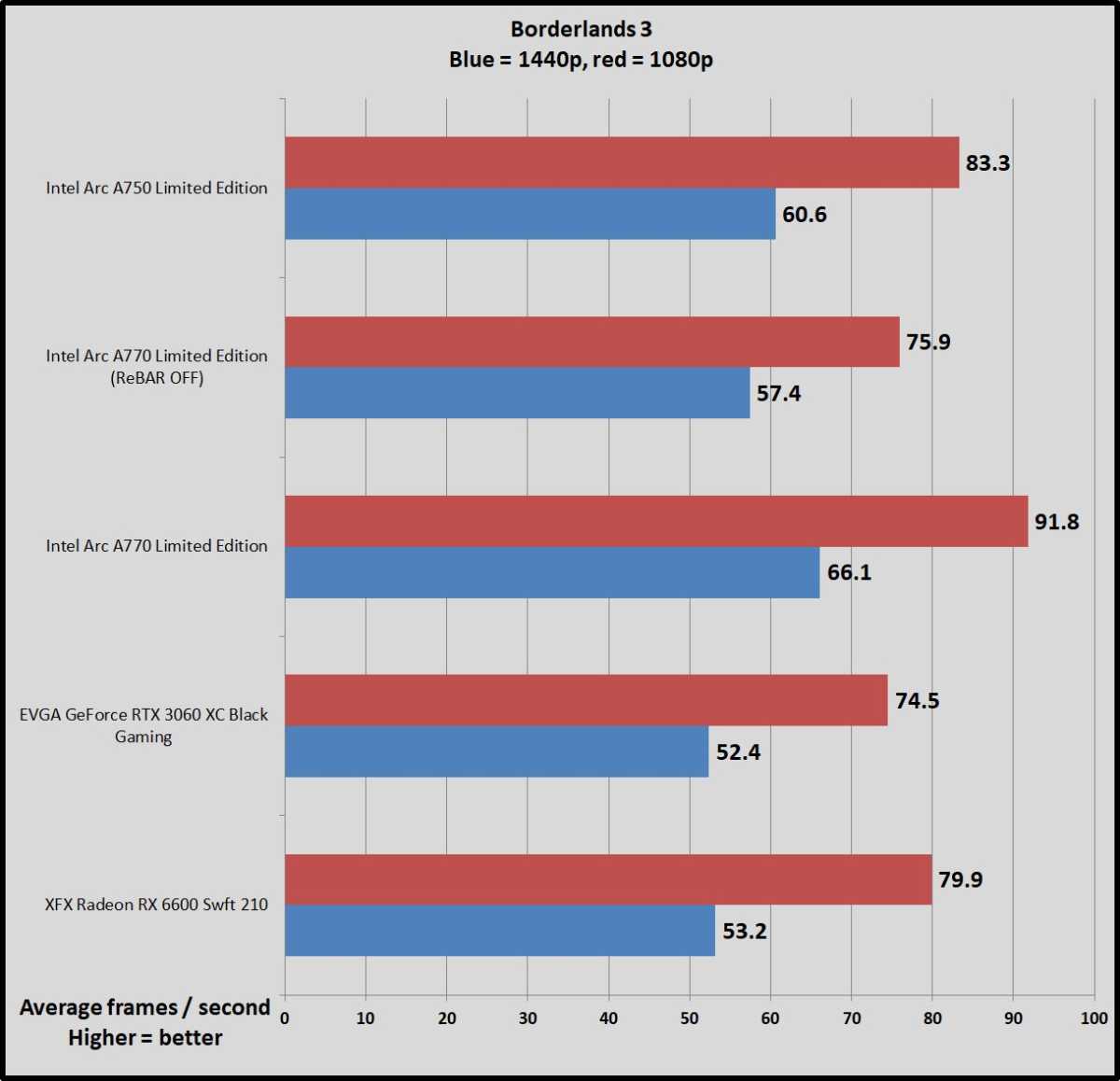

Borderlands 3

Borderlands is back! Gearbox’s game defaults to DX12, so we do as well. It gives us a glimpse at the ultra-popular Unreal Engine 4’s performance in a traditional shooter.

Brad Chacos/IDG

This game tends to favor AMD hardware over GeForce…but as these benchmarks show, it favors Intel’s Arc even more.

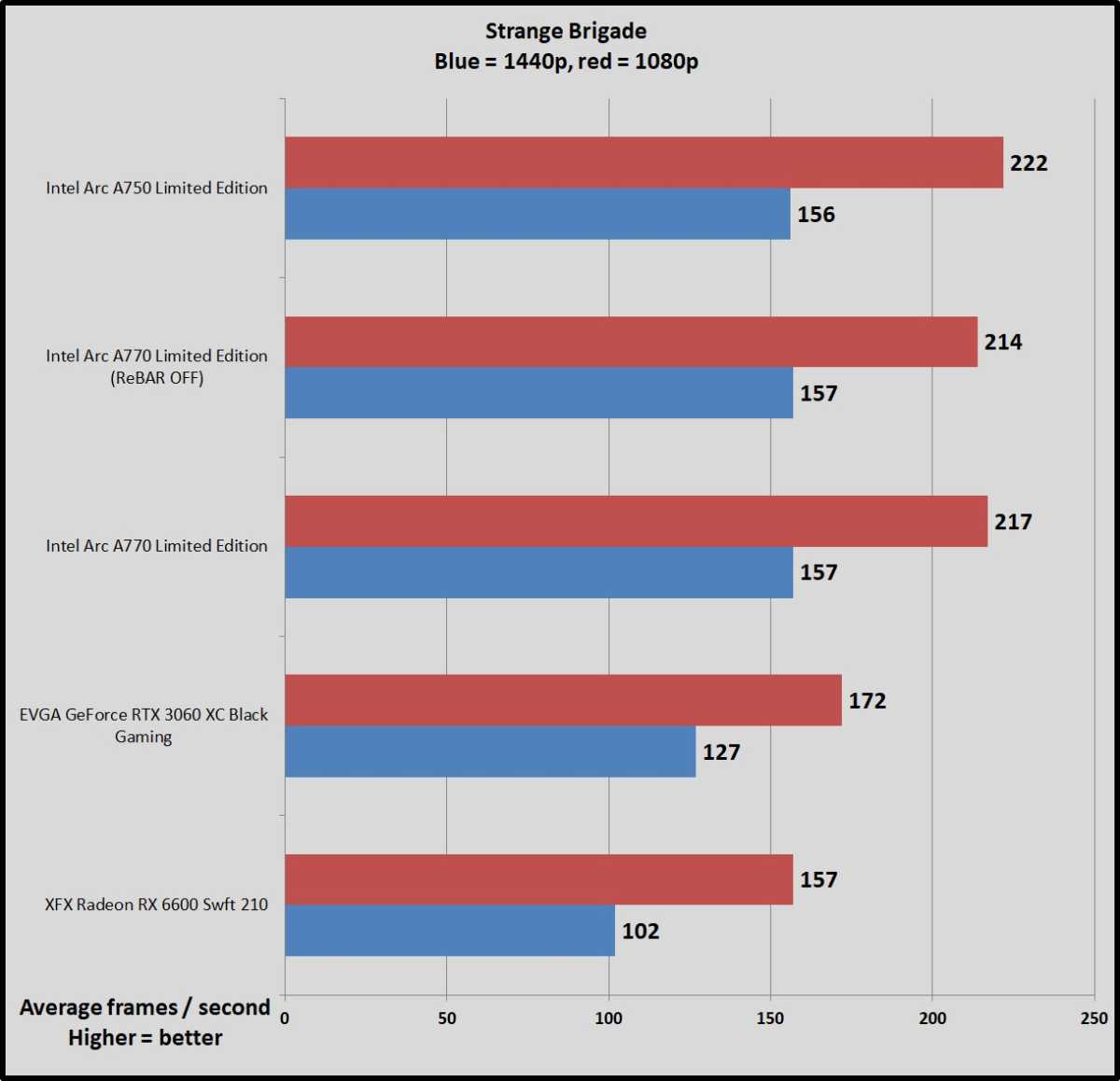

Strange Brigade

Strange Brigade is a cooperative third-person shooter where a team of adventurers blasts through hordes of mythological enemies. It’s a technological showcase, built around the next-gen Vulkan and DirectX 12 technologies and infused with features like HDR support and the ability to toggle asynchronous compute on and off. It uses Rebellion’s custom Azure engine. We test using the Vulkan renderer, which is faster than DX12.

Brad Chacos/IDG

This game isn’t heavily played these days, but if you still rock it, Intel Arc is the clear way to go. It delivers another impressive victory.

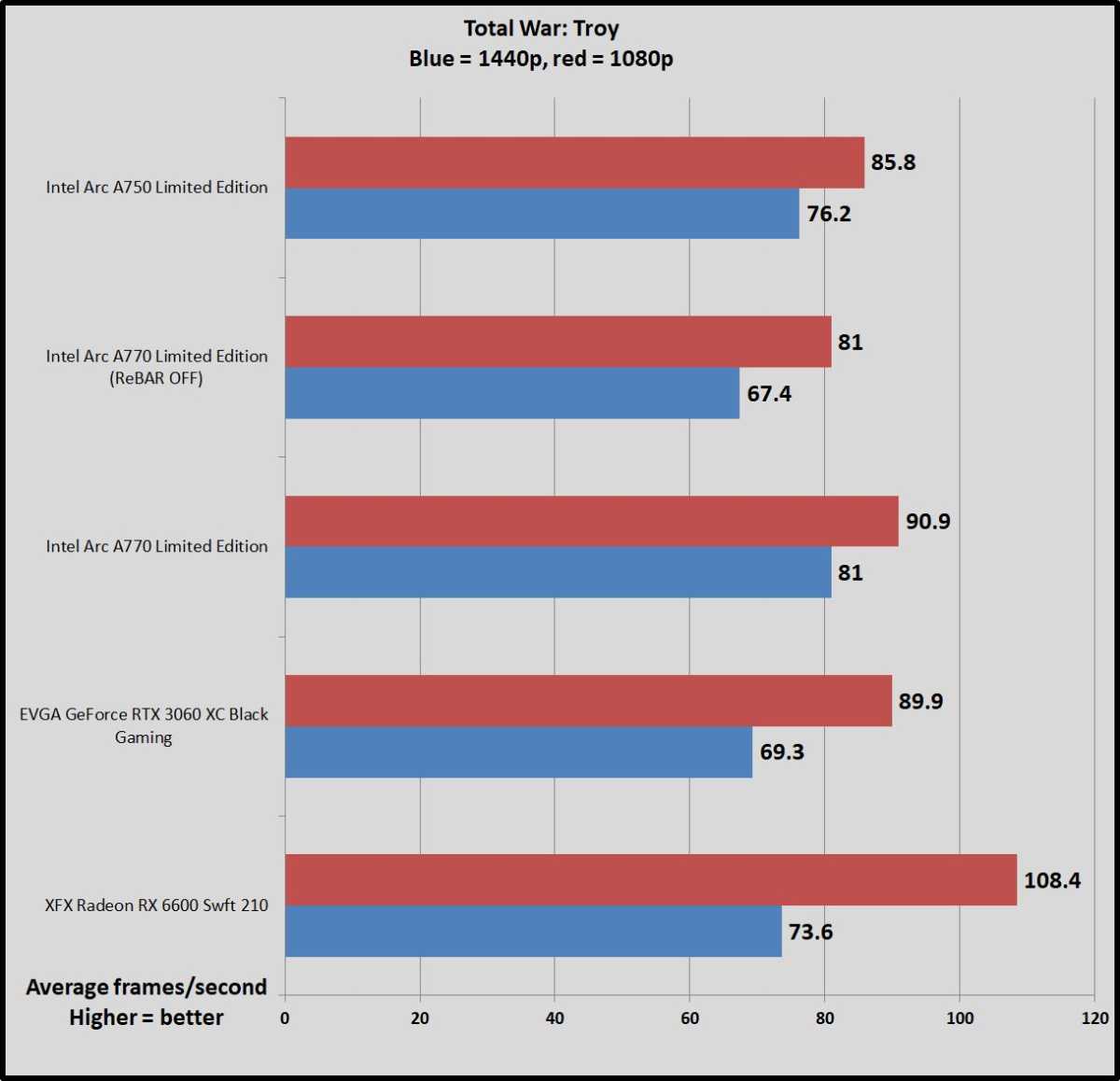

Total War: Troy

The latest game in the popular Total War saga, Troy was given away free for its first 24 hours on the Epic Games Store, moving over 7.5 million copies before it went on proper sale. Total War: Troy is built using a modified version of the Total War: Warhammer 2 engine, and this DX11 title looks stunning for a turn-based strategy game. We test the more intensive battle benchmark.

Brad Chacos/IDG

DX11 is a weak spot for Arc, but it manages to hold its own here. Intel’s GPUs come in last place at 1080p resolution, with the Radeon RX 6600’s Infinity Cache helping it blaze ahead. But the tables flip at 1440p resolution, where the Arc A770 actually takes a comfortable lead over all others.

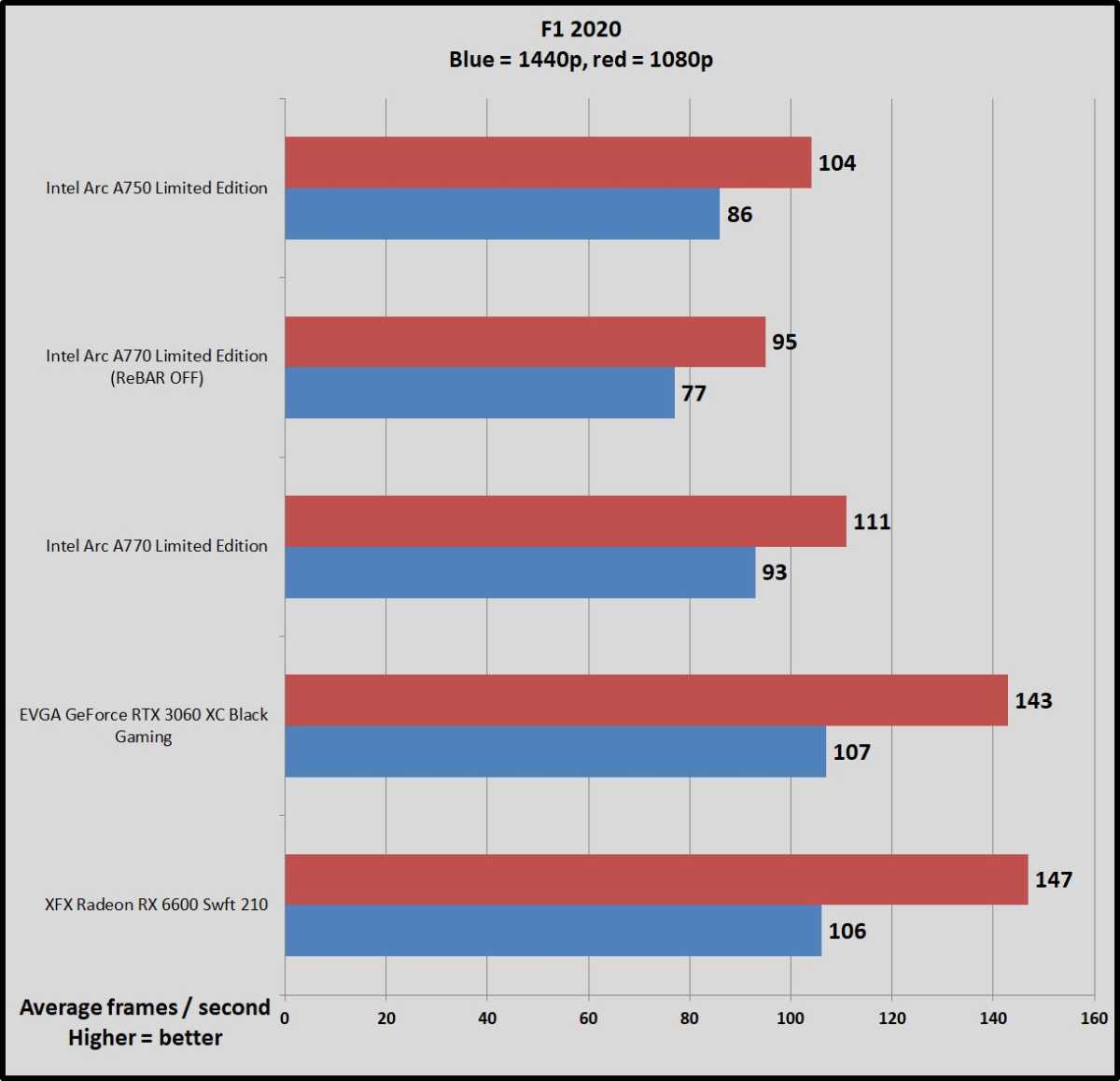

F1 2020

The latest in a long line of successful racing games, F1 2020 is a gem to test. It supplies a wide array of both graphical and benchmarking options, which makes it a much more reliable (and fun) option than the Forza series. It’s built on the latest version of Codemasters’ buttery-smooth Ego game engine, complete with support for DX12 and Nvidia’s DLSS technology. We test two laps on the Australia course, with clear skies and DLSS off.

Brad Chacos/IDG

We test with DX12 rather than the optional DX11 mode, but Arc’s performance still lags here. Like Metro Exodus, it’s an illustrative result, only in the opposite direction: when Arc stumbles, it can stumble hard. That said, while Arc isn’t as fast as its rivals in F1 2020, it still delivers fast, totally playable frame rates at both 1080p and 1440p, so there’s no reason to dismiss it outright.

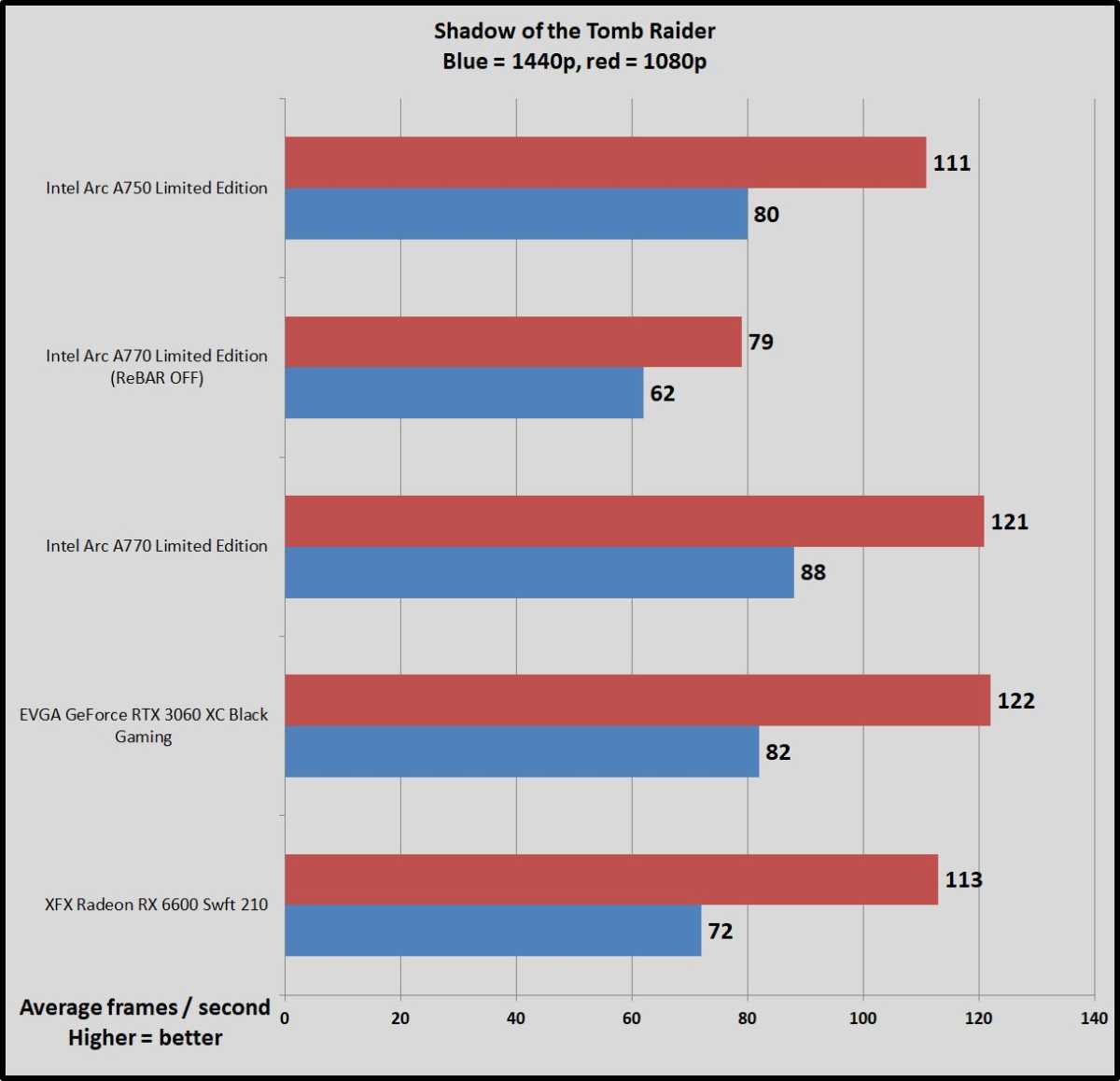

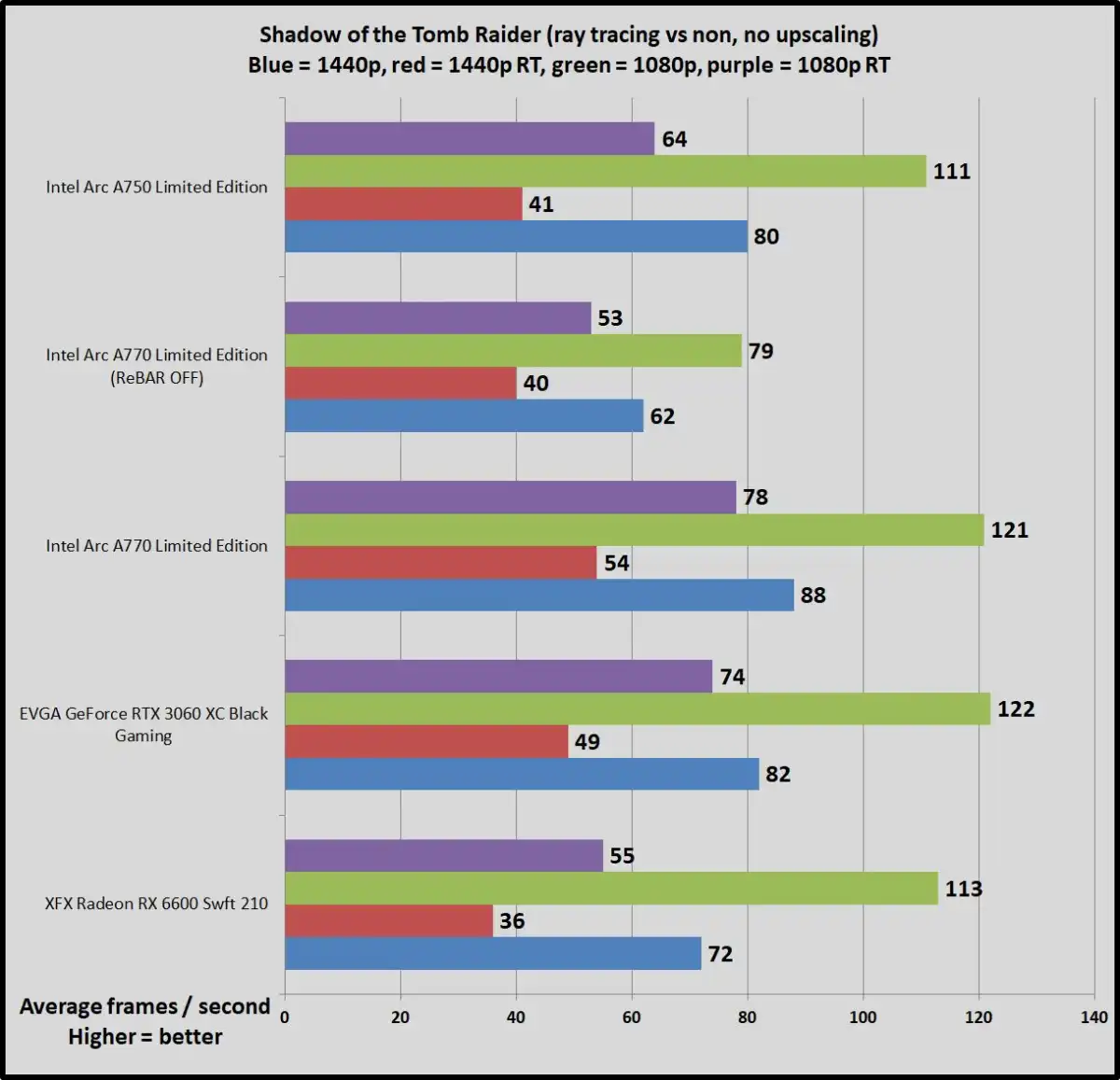

Shadow of the Tomb Raider

Shadow of the Tomb Raider concludes the reboot trilogy. It’s still utterly gorgeous and receives updates adding new features, like Intel’s XeSS. Square Enix optimized this game for DX12 and recommends DX11 only if you’re using older hardware or Windows 7, so we test with DX12. Shadow of the Tomb Raider uses an enhanced version of the Foundation engine that also powered Rise of the Tomb Raider and includes optional real-time ray tracing, DLSS, and XeSS features, among others.

Brad Chacos/IDG

Performance excels on Arc, matching the RTX 3060—as long as you have PCIe Resizable BAR on, that is.

Ray tracing performance

We also benchmarked the Arc A770 Limited Edition in a handful of titles that support cutting-edge real-time ray tracing effects: Cyberpunk 2077, Metro Exodus, Watch Dogs: Legion, and Shadow of the Tomb Raider.

Legion packs ray-traced reflections, Tomb Raider includes ray-traced shadows, Metro features more strenuous (and mood-enhancing) ray-traced global illumination, and Cyberpunk 2077 packs in ray-traced shadows, reflections, and lighting alike.

We test these all with Ultra settings enabled, including the highest ray tracing options. Notably, we’re testing raw ray tracing performance here, not the boost provided by upscaling technologies like Nvidia’s DLSS, AMD’s FSR, and Intel’s own XeSS. All three of those can supercharge frame rates with minimal impact to visual quality, depending on the settings used, but they’re all dependent on integration from game developers, resulting in patchwork adoption. By testing frame rates with ray tracing on but upscaling technologies off, we can see the efficacy of the dedicated ray tracing cores themselves rather than muddying the water with AI upscaling that might not result in an apples-to-apples comparison.

Pay attention not just to the raw frame rates with RT on, but also to how big a relative gap there is between RT off and RT on for the same GPU. A smaller relative gap means better overall RT core performance, and vice versa.
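
To make that comparison concrete, here’s a minimal sketch of the relative-gap calculation: the percentage of frame rate lost when ray tracing is switched on. The function name and both GPUs’ frame rates are hypothetical placeholders, not figures from our charts.

```python
def rt_cost_percent(fps_rt_off: float, fps_rt_on: float) -> float:
    """Share of frame rate (in percent) lost when ray tracing is enabled.

    A smaller value suggests the GPU's ray tracing hardware absorbs the
    extra work more efficiently, independent of its raw frame rate.
    """
    if fps_rt_off <= 0:
        raise ValueError("RT-off frame rate must be positive")
    return round((1 - fps_rt_on / fps_rt_off) * 100, 1)


# Hypothetical GPUs: A is faster overall, but B loses less when RT is on.
gpu_a = rt_cost_percent(fps_rt_off=100.0, fps_rt_on=60.0)
gpu_b = rt_cost_percent(fps_rt_off=80.0, fps_rt_on=56.0)
print(f"GPU A loses {gpu_a}%, GPU B loses {gpu_b}%")
# GPU A loses 40.0%, GPU B loses 30.0%
```

In this made-up example, GPU A still posts the higher RT-on frame rate (60fps vs. 56fps), but GPU B’s smaller relative gap points to more capable ray tracing hardware. That’s the pattern worth looking for in the charts.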

Brad Chacos/IDG

Brad Chacos/IDG

Brad Chacos/IDG

Brad Chacos/IDG

Surprise, surprise! Intel’s debut ray tracing implementation manages to outpunch even Nvidia’s vaunted second-gen RTX 30-series RT cores. Wow. If Intel manages to get XeSS running on more games—hundreds now include Nvidia’s DLSS—we might have a battle on our hands, though Nvidia’s new GeForce RTX 40-series delivers a massive ray tracing boost. AMD, meanwhile, has been doing good work for the entire industry with its vendor-agnostic FSR upscaling, but still clearly has more work to do on the raw ray tracing front.

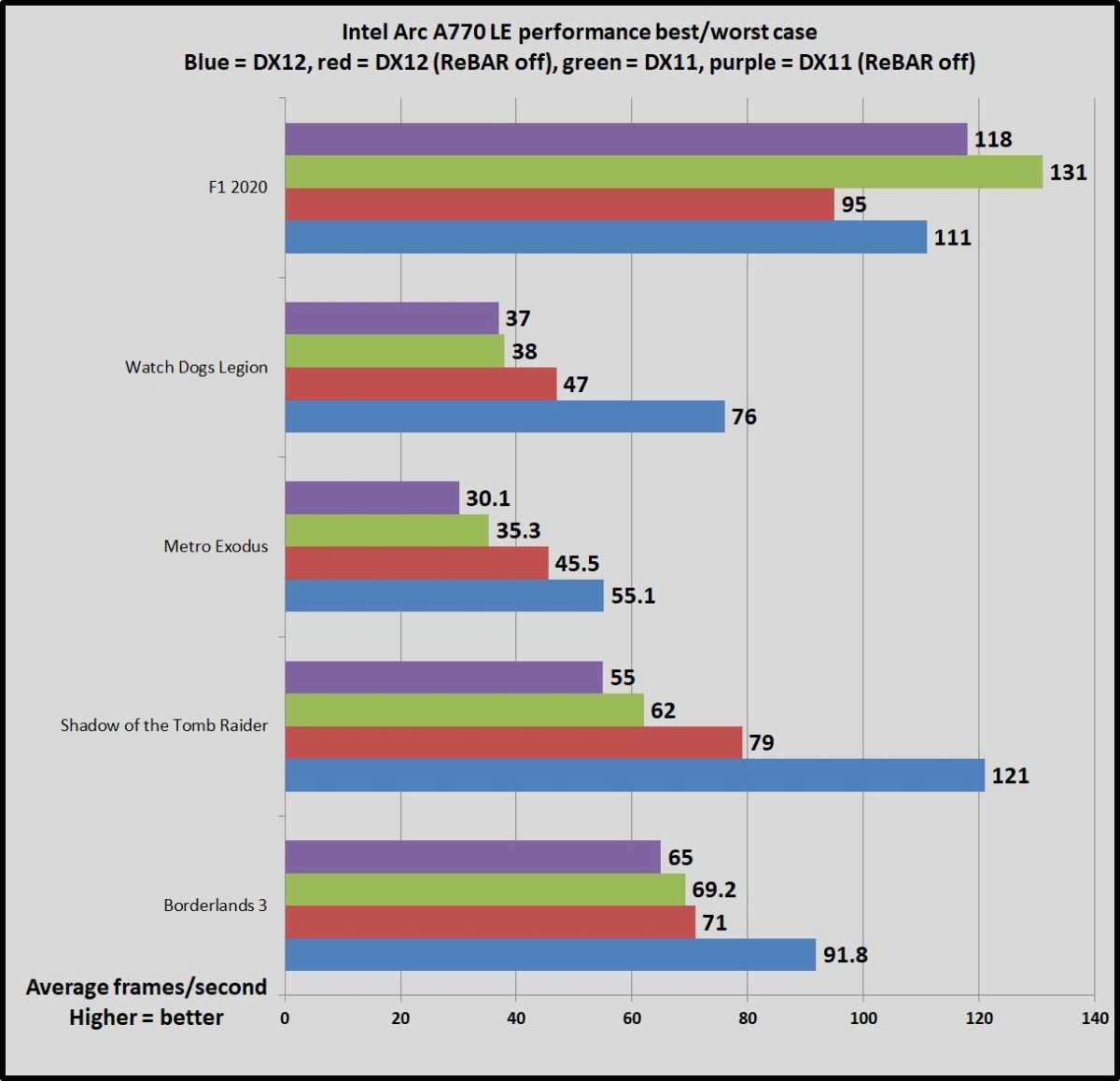

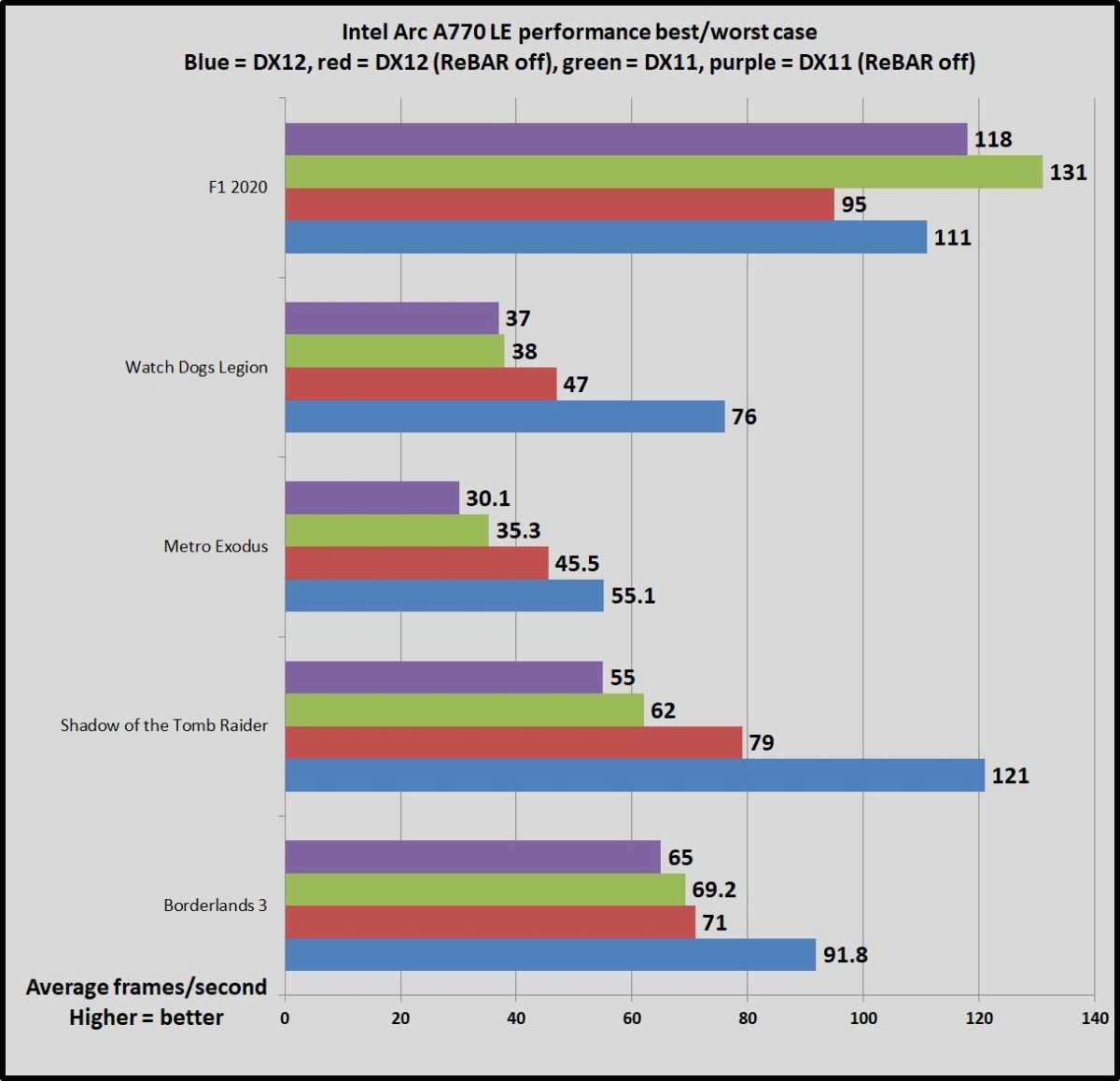

Intel Arc best case vs. worst case

Several of the games in our test suite can run in either DirectX 11 mode or a DX12/Vulkan mode. Given Intel’s admitted weakness in DX11 performance, we took the time to benchmark the Arc A770 Limited Edition in both modes in those games to showcase the performance difference. This helps illustrate Arc’s DX11 handling, since our testing suite largely revolves around those newer graphics APIs.

We also benchmarked those same games again, in both DX11 and DX12/Vulkan, but this time with Arc’s crucial PCIe Resizable BAR support disabled, to reveal both the absolute best and absolute worst-case scenarios in those titles.

Brad Chacos/IDG

Weirdly, F1 2020 performs significantly better in DX11 mode, bucking expectations. But in general, there’s a staggering performance drop moving from DX12 to DX11, and a separate staggering performance loss if you have ReBAR off. In the absolute worst case above, Shadow of the Tomb Raider runs a whopping 55 percent slower on DX11 with ReBAR off than it does in DX12 with ReBAR on. Other games show performance losses ranging from 29 to 51 percent between their best and worst-case scenarios, F1 2020’s oddness excepted. Ooooof.
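
The best-versus-worst comparison boils down to picking the fastest and slowest of the four API/ReBAR configurations for a game and expressing the gap as a percentage of the best result. Here’s a minimal sketch, with a hypothetical function and made-up frame rates chosen only to keep the arithmetic easy to follow:

```python
def best_worst_spread(results: dict[str, float]) -> tuple[str, str, float]:
    """Return the best and worst configurations and how much slower the worst is.

    `results` maps a configuration label to its average frame rate.
    The spread is the worst result's shortfall as a percentage of the best.
    """
    best = max(results, key=results.get)
    worst = min(results, key=results.get)
    slower = (results[best] - results[worst]) / results[best] * 100
    return best, worst, round(slower, 1)


# Placeholder numbers for one game, not measured results
configs = {
    "DX12, ReBAR on": 100.0,
    "DX12, ReBAR off": 80.0,
    "DX11, ReBAR on": 70.0,
    "DX11, ReBAR off": 50.0,
}
print(best_worst_spread(configs))
# ('DX12, ReBAR on', 'DX11, ReBAR off', 50.0)
```

Because the sketch simply takes the maximum and minimum, it also handles oddballs like F1 2020, where DX11 rather than DX12 turns out to be the faster mode.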

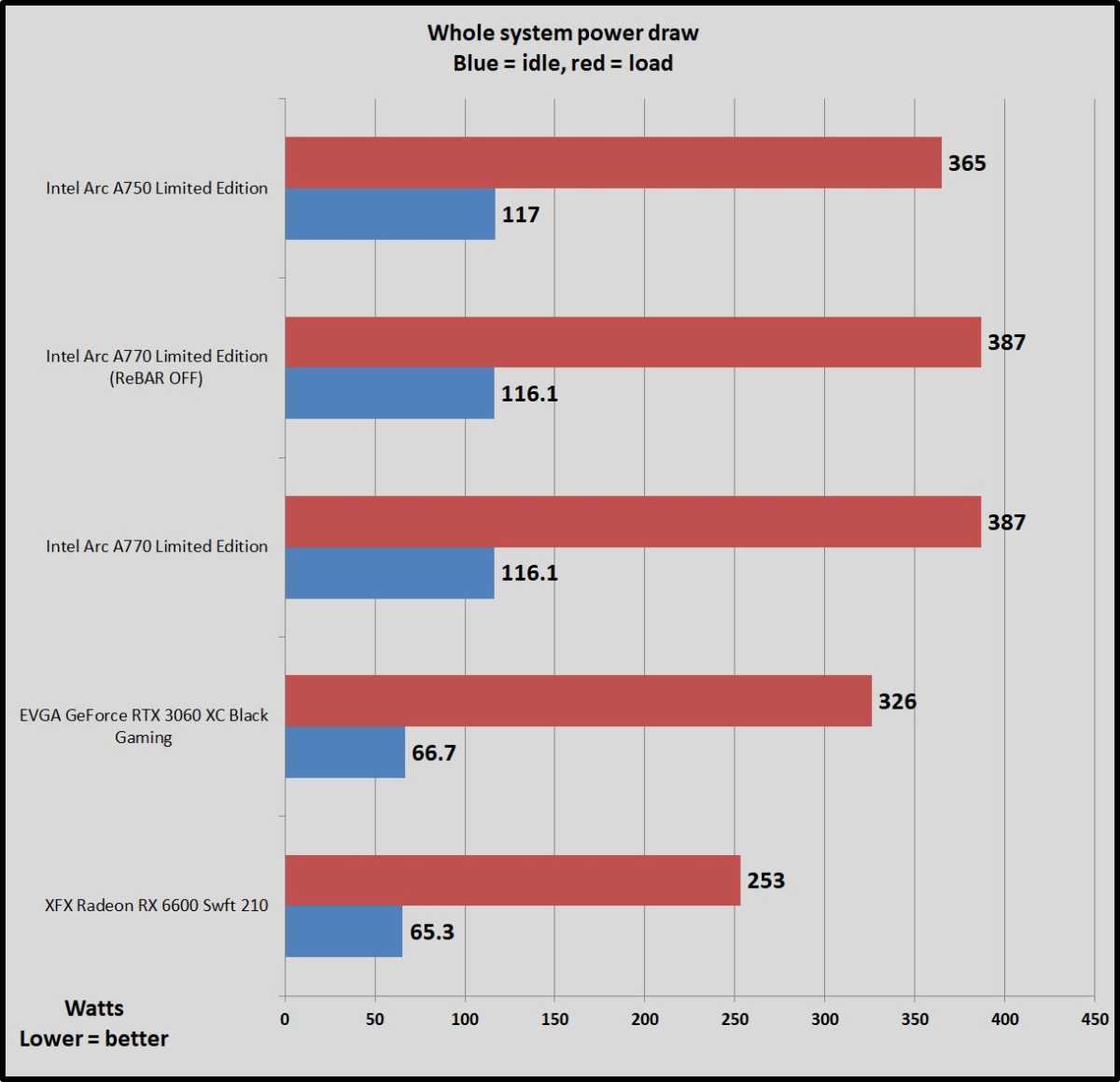

Power draw, thermals, and noise

We test power draw by looping the F1 2020 benchmark at 4K for about 20 minutes after we’ve benchmarked everything else and noting the highest reading on our Watts Up Pro meter, which measures the power consumption of our entire test system. The initial part of the race, where all competing cars are on-screen simultaneously, tends to be the most demanding portion.

This isn’t a worst-case test; this is a GPU-bound game running at a GPU-bound resolution to gauge performance when the graphics card is sweating hard. If you’re playing a game that also hammers the CPU, you could see higher overall system power draws. Consider yourself warned.

Brad Chacos/IDG

Intel’s Arc A7 GPUs use a much larger die than their rivals and draw notably more power. That’s expected under load, but the high idle power draw also raises our eyebrows. Intel has work to do here with its next-generation “Battlemage” GPUs.

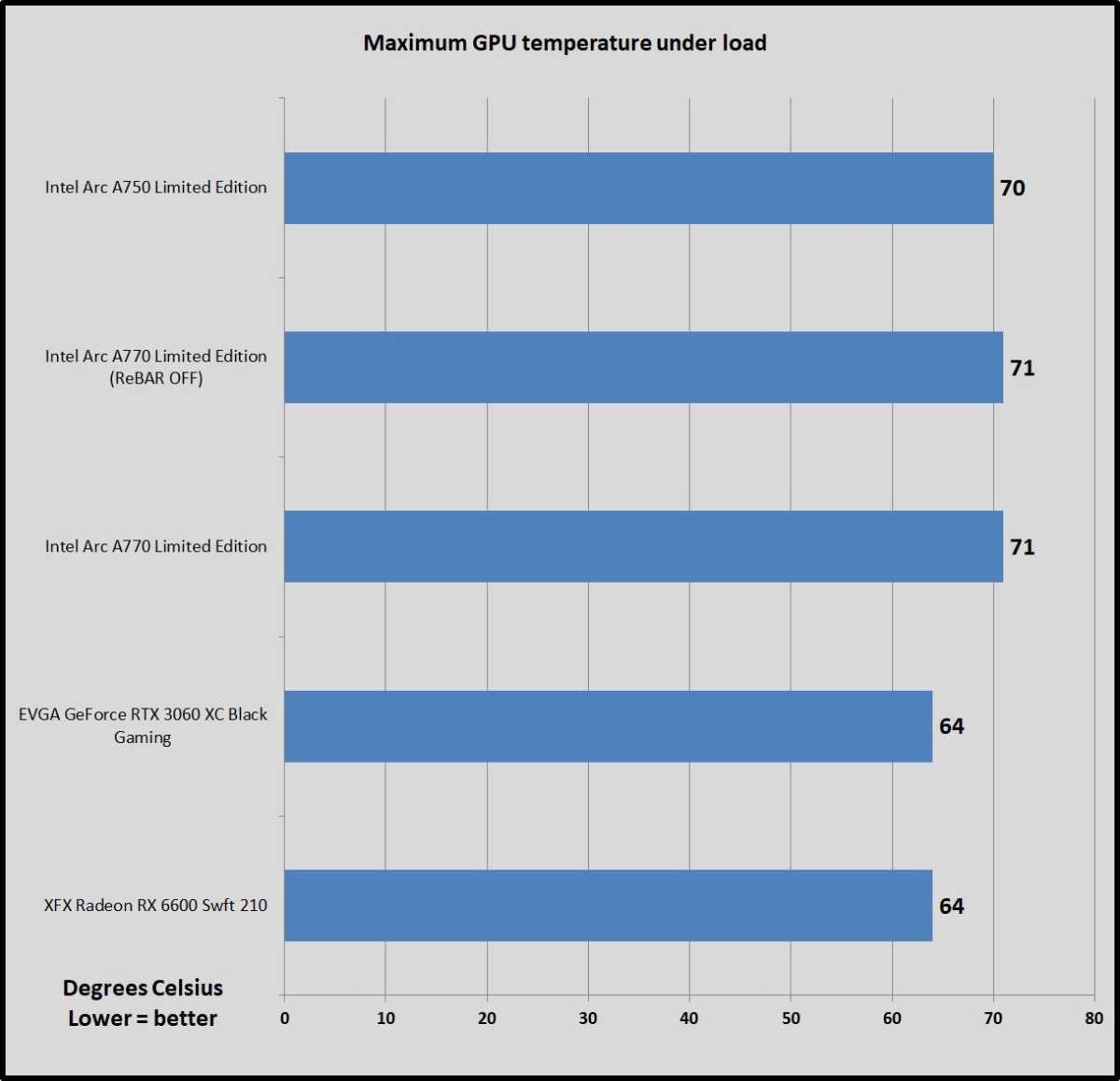

We test thermals by leaving GPU-Z open during the F1 2020 power draw test, noting the highest maximum temperature at the end. For Intel’s Arc GPUs, we needed to use the Arc Control software and HWiNFO instead, as GPU-Z did not yet recognize Intel’s temperature sensors.

Brad Chacos/IDG

Intel’s Arc Limited Edition cooler design hits the mark, at least. It isn’t quite silent, but it is admirably quiet, and it tames those Xe HPG cores with aplomb. We did notice some very faint coil whine during game menus with the Arc A770 Limited Edition.

Should you buy the Intel Arc A770 and Intel Arc A750?

Intel launching its debut Arc A7 desktop graphics cards is a momentous event for the computer industry. After decades of duopoly, a new player has entered the GPU game. While Arc isn’t perfect, it’s good enough to provide hope for a more competitive future.

Brad Chacos/IDG

A big part of that comes down to pricing. Intel originally planned to launch Arc amid the ongoing graphics card shortage of the last two years. Driver woes delayed that launch, and now the floodgates are open. Rather than burying its proverbial head in the sand, Intel priced the Arc A750 and A770 very competitively for the current market—at least against the popular GeForce RTX 3060. AMD’s sturdy Radeon RX 6600 spoils that value proposition a bit if you’re simply looking for solid 1080p gaming.

There’s a lot to like with Arc. Intel’s Limited Edition cooler looks good and runs cool and quiet. Arc’s AV1 encoding performance blows away the competition. In games running DX12 or Vulkan, it can not only keep pace with the RTX 3060 but sometimes absolutely crush Nvidia’s offering under its heel. Speaking of Nvidia, Intel’s debut ray tracing implementation already topples the RTX 30-series’ RT cores and leaves Radeon GPUs eating dust, though DLSS’s widespread adoption gives Nvidia an overall edge there. Arc also shines at higher resolutions, with the A770 stretching its legs (and often, its lead) at 1440p.

Arc stumbles hard if you don’t have PCIe Resizable BAR or need to use DX11.

Brad Chacos/IDG

But enough quirks and oddities remain that it’s difficult to recommend Arc except in very niche circumstances. Intel admits that Arc’s DirectX 11 performance lags behind its rivals, and our testing showed that gulf to be significant. Most games still run on DX11. On top of that, you’ll need a modern system with support for PCIe Resizable BAR, or you’ll leave another huge chunk of performance on the table. Arc sucks down considerably more power than its Nvidia and AMD rivals, even when idling. And while Intel’s drivers are in much better shape than they were months ago, and thousands of engineers are squashing bugs and improving performance as quickly as possible, we still encountered several crashes and other software-related woes that you simply don’t see with GeForce or Radeon GPUs these days.

If you don’t care about ray tracing, AMD’s Radeon RX 6600 remains our go-to pick for 1080p gaming, delivering frame rates well in excess of 60fps even at Ultra settings in most games. It uses considerably less power, has considerably more stable drivers, and AMD’s killer Radeon Super Resolution tech can increase frame rates even further in almost every game (albeit with less fidelity than DLSS, XeSS, or AMD’s FSR 2.0). You can usually find it going for $250 to $260 at retailers these days. If you want even more oomph, the step-up Radeon RX 6600 XT at roughly $300 is noticeably faster than the RX 6600 and Arc alike at 1080p while still costing less than Arc, but it’s less ideal for 1440p gaming.

AMD’s Radeon 6000-series graphics cards aren’t good at ray tracing, however. If you want to melt your face with those cutting-edge lighting effects, you’ll want to look elsewhere. Arc’s raw ray tracing horsepower actually surpasses Nvidia’s vaunted RT cores—but those cores are just part of Nvidia’s “RTX” stack, which refers to hardware and software alike. Turning on ray tracing nukes frame rates; you need an upscaling solution like DLSS or XeSS to claw back that performance. Right now, DLSS is supported in hundreds of games, while XeSS is still getting off the ground in a handful of titles. Sure, Intel’s raw ray tracing performance bodes well for the future and is impressive in a vacuum, but until XeSS (or AMD’s vendor-agnostic FSR 2.0) becomes more widely available, Nvidia’s DLSS adoption gives the GeForce RTX 3060 the no-brainer practical advantage despite its higher street price. It’s also a solid 1440p gaming option.

Brad Chacos/IDG

That’s not to say Intel’s Arc deserves to be shunned. They are oddball GPUs, but largely good ones (if you don’t mind random instability). If you mostly play new, triple-A games built on DX12 or Vulkan, the Arc A750 and A770 can far outpace their rivals in many situations, for a far lower price than the RTX 3060. In those best-case scenarios, Arc truly delivers outstanding value. And if you want AV1 encoding for a reasonable price (it is that much of a game-changer for YouTube video creators), Arc will likely be your only option for the next five or six months.

I have no doubt that Intel’s engineers will keep whipping Arc’s performance into shape, especially if the company funnels most of its GPUs into prebuilt systems that give Intel more complete control over the entire system (and ensure ReBAR activation), but you never want to buy hardware on future promises. Arc’s debut performance is a mixture of staggering highs and painful caveats, punctuated by work-in-progress drivers. Most gamers will be better off opting for the more polished experience provided by Nvidia and AMD, but the Arc A750 and A770 provide compelling hope for a more competitive future—something the graphics card market desperately needs after two years of shortages and GPUs going for $1,600. Welcome to the new era.

Editor’s note: This review originally published on October 5, but was updated October 12 to include buying information and insights from our GeForce RTX 4090 review.